Research Leaders' Playbook

Tools and tactics for leading coordinated research programs

Table of Contents

Section I: Meta-understanding Coordinated Research

Section II: Running a Coordinated Research Program

- The Different Roles and Phases of Running a Coordinated Research Program

- Institutional Moves for Interfacing with Other Organizations

WTF is a Coordinated Research Program?

“Coordinated research program” is a broad term covering a wide range of activities that nevertheless share enough important similarities that we can talk about them as a group. Like most nebulous things, any tight definition would have so many exceptions and caveats as to be useless. So instead, I’ll define a coordinated research program by describing what it is and what it is not so you can start to pattern-match.

Coordinated research programs:

- Do research work that doesn’t make sense for a single academic lab or startup to tackle for some systemic, institutional reason. There are many reasons why it might not make sense: it might not fit in an academic lab because it requires a lot of repetitive engineering and wouldn’t create publishable results; it might not fit in a startup because it would be hard to capture the value it creates, or because it isn’t creating a sellable product at all. Unfortunately, “nobody will fund me to do this” is not a systemic, institutional reason.

- Have a single, opinionated leader with a lot of control over the program’s direction and actions.

- Involve more than one person.

- Are finite and have a precise goal. That is, when you reach the end of a finite amount of time, you know whether or not you’ve hit your goal. Coordinated research programs can certainly lead to other coordinated research programs in order to hit a longer-term goal, but each program needs its own clear goals.

- Very often create public goods. This is generally a big reason that they don’t make a good startup. Some cases are obvious, like creating an open-source tool or public dataset. However, there are many gray areas, such as a program that figures out how to scale up a process that a startup then commercializes. The general sense is that these programs should create some kind of positive externality; otherwise they either aren’t worth it or should be funded by investors.

- Very often involve work being done in more than one organization. Coordinated research programs exist on a spectrum between fully externalized (where the coordinating organization does none of the hands-on research) and fully internalized (where the coordinating organization does all of the hands-on research). In their platonic ideals, ARPA programs are an example of the former and FROs are an example of the latter.

Within these broad characteristics, this playbook is primarily meant for people creating coordinated research programs with a few more characteristics:

- They are meant to have a broad impact in the world, whether through creating new knowledge, tools, or processes. Coordinated research programs can also try to unlock esoteric new knowledge, but the way you go about starting and running those programs is quite different.

- They have a timeline of ~five years and a budget on the order of ~$10-50 million. Planning for drastically more or less time than five years, or raising and deploying drastically more or less money than that, is just very different.

Some examples of coordinated research programs within the scope of this playbook:

- ARPA programs.

- Focused Research Organizations.

- Many of the programs at foundations like The Gates Foundation or The Sloan Foundation.

Some examples of coordinated research programs at the edge of or beyond the scope of this playbook:

- LIGO (The Laser Interferometer Gravitational-Wave Observatory)

- The Rockefeller Foundation’s Molecular Biology program

Some examples of things that probably aren’t coordinated research programs:

- Most NSF and NIH programs: Importantly, program officers are often beholden to committees about what they fund and have little ability to change the program’s direction once it has started.

- Most institutes (like the Allen Institute, the Arc Institute, HHMI, etc.): Importantly, institutes last an indefinite amount of time and often have broad rather than precise goals.

What Ideas Make a Good Coordinated Research Program?

Coordinated research programs can take many forms, but not all ideas – even great research ideas – make a good coordinated research program. It’s impossible to enumerate the exact characteristics of ideas that make a good program: every characteristic would have many exceptions. (It doesn’t help that ‘coordinated research program’ is an umbrella term for a whole class of things which themselves are incredibly nebulous!)

The best we can do is a list of characteristics where the more characteristics an idea can check off, the more likely it is to make a good coordinated research program. We can then go one level deeper and list the characteristics that make an idea particularly well-suited for one type of program or another (e.g. an ARPA program vs. an FRO).

Good coordinated research programs…

- …have an ambitious goal with a clearly defined scope. Unlike many good research programs, the best coordinated research programs don’t muddle forward while supporting wide-ranging work, even if it’s great work. This is partly because plenty of organizations already run broad research programs, and partly because a program that is both appropriately ambitious and diffuse can’t get much done in the few years a coordinated research program lasts.

- …have some people who think that goal might be impossible (and they might be right). Not only is this a good heuristic for ambition; because one of the most useful things a program can do is turn the impossible into the inevitable, it’s also important to have a clear set of people whose minds can be changed.

- …target high-leverage parts of a bigger problem: pieces that, if solved, unlock an order-of-magnitude improvement in something that matters to people. The ambition of a coordinated research program doesn’t come primarily from the breadth of the problem or proposed work. It comes from the difficulty of the work and the risk that achieving your goal is not scientifically or technically possible.

- …have a clear argument for why that ambitious goal is possible. It’s easy to lay out an ambitious goal, but hard to lay out one with clear reasons why it might be achievable.

- …can imaginably reach that ambitious goal in ~five years. A few years is shockingly short for ambitious research, so ideas need to walk a fine line. (There is no structural reason for this five-year timeline, but acting on a much shorter or longer timeline sets off a cascade of changes that turns the idea into a very different thing.)

- …require more than $1M but less than ~$50M to hit those goals. Planning and funding work below or above that range is very different.

- …focus on neglected problems or very different approaches to existing problems. Coordinated research programs are relatively small in the grand scheme of money spent on research worldwide. On the margin, a program using a slightly different approach to tackle a problem that many other people are working on will not have much impact.

- …have a clear ‘theory of change.’ At the end of the day, a coordinated research program can create amazing knowledge or technical capabilities, but it won’t matter unless other individuals or organizations carry it forward after the program ends. For that to happen, the program needs a clear idea (which can, of course, change over time) of what happens when the program ends and how to enable it. (This is true even though the impact often doesn’t happen the way the theory of change intended!)

What ideas should be a specific type of coordinated research program?

ARPA programs and Focused Research Organizations (FROs) are two of the most legible structures for coordinated research programs, so it’s worth digging into specific characteristics that make an idea particularly well-suited for ARPA programs or FROs.

For the purpose of this discussion, “ARPA program” is shorthand for external research coordinated by an empowered program leader, while “FRO” is shorthand for a time-boxed nonprofit research organization that primarily does internal research. In reality, “ARPA programs” and “FROs” are nebulous concepts without a clean line between them. For more on the difference between FROs and ARPA programs, you can also read this piece.

It’s easier to start ARPA programs and FROs because of their institutional legibility, but keep in mind that coordinated research programs can take other forms, most of which do not have names!

What ideas make a good ARPA program?

Good ARPA programs…

- …have work that can be parallelized. Mechanically, ARPA programs consist of a number of different performers working on different projects. This style of work lends itself best to work that can be parallelized, whether it’s different components of a system or different approaches to a problem.

- …require expertise that lives in existing organizations and would be hard to hire away. Externalized research has the advantage that it doesn’t require researchers to leave their home institution.

- …have several possible routes to impact. A common theme of ARPA programs is that they make a number of bets not just on approaches to solving the problem but on the way that they’re going to have an impact. For example, the program may produce a technology that is taken up by a large organization or a startup; or maybe it starts a new field of research.

Some examples of good ARPA programs (and why):

ARPANET: The ARPANET program created the network that would eventually become the internet.

It was well-suited to be an ARPA program because:

- It was justified in terms of an immediate need (robust communication infrastructure) but had the potential to go on to be much more impactful.

- It involved both grungy engineering problems (building and installing the hardware) and hard research problems (coming up with robust protocols).

- It required coordinating a number of different organizations (contractors and academic labs) with different expertise.

DARPA Grand Challenge: The DARPA Grand Challenge was a prize competition for a vehicle to autonomously navigate across the California desert.

It was well-suited to be an ARPA program because:

- It required unconventional funding mechanisms. It was one of the first times the US government offered prizes for a competition.

- Autonomous driving was a technology that had been worked on for decades but was at the point where a strong push could create a step-change in its capabilities.

- It shifted perception of autonomous vehicles from something that might never happen to something that was inevitable, leading to tons of follow-on work in large companies and startups.

GPS miniaturization: The original GPS receivers used analog signals and weighed 50 pounds. The MGR program created GPS receivers that were the size of a pack of cards and digitized the system.

It was well-suited to be an ARPA program because:

- It was impactful work that wasn’t particularly “novel.” (GPS already existed, the challenge was just to make it smaller.)

- It had a clear but incredibly ambitious goal.

- It opened the path for many subsequent improvements.

What ideas make a good FRO?

Good FROs…

- …have a clear goal from day one: whether it’s a dataset, a well-defined tool, or something else.

- …know precisely how they’ll deploy ~$50M early on in the program’s lifetime. This is harder than you think!

- …have a single project or tight coupling between projects from day one.

- …have strong conviction about the right approach at the project level early on.

- …have a core team willing to work full-time lined up before starting. An FRO needs to hit the ground running and is initially judged on its core team.

Some examples of good FROs (and why):

E11 Bio: E11 Bio is mapping the entire mouse brain at the cellular level. The organization is creating a dataset and developing technologies that are primarily useful for researchers – a valuable public resource that would be incredibly hard to monetize.

It is well suited for an FRO because:

- Doing this brain mapping requires a lot of engineering to extend existing technologies and a lot of repetitive but expensive work to slice brains, scan those slices, and analyze them.

- While all of this work is hard and requires a lot of problem solving, it could be described at a pretty granular level early on.

Cultivarium: Cultivarium creates open-source tools to enable scientists to work with novel microorganisms: things like data on growth rates, protocols for modifying their DNA, computational tools, etc.

It is well suited for an FRO because:

- There’s a vaguely standard set of tools and data you want to create for each microorganism; it just takes a lot of work to create them.

RAND Health Insurance Experiment: The RAND Corporation had a lot of clear experimental questions that could only be tested in the context of a health insurance company. So they started their own.

While the concept of an FRO had not been invented yet, it was well suited for an FRO because:

- It was a time-boxed experiment (most of the work was done in the first five years).

- It was answering clear questions.

- It was clear what execution looked like from day one.

What ideas aren’t good fits for coordinated research programs?

Some examples of good ideas that are a poor fit for a coordinated research program:

LIGO: The Laser Interferometer Gravitational-Wave Observatory is a large-scale physics experiment and observatory designed to detect cosmic gravitational waves.

It has been incredibly impactful for our knowledge of the universe but would have been a poor coordinated research program for several reasons:

- LIGO took several decades of prototyping and building. Coordinated research programs generally exist on shorter timescales.

- LIGO took hundreds of millions of dollars to get to the point where it could take observations. Coordinated research programs are generally tens of millions of dollars.

- LIGO’s main focus is expanding the scope of human knowledge rather than creating new capabilities.

SpaceX: While it involved a lot of high-risk technology development, SpaceX both had a clear path to profitability and the work to create that technology was best done in a single, tightly-coordinated organization.

NEON: The National Ecological Observatory Network is a program which collects open, standardized ecological data on plants, animals, soil, nutrients, freshwater and the atmosphere at timescales varying from seconds to years from 81 field sites across the U.S.

Planning, building, and running this network requires incredible coordination. NEON addresses a neglected problem and will have wide-ranging impacts on our understanding and management of changes in climate and ecosystems. Even so, it doesn’t fit for several reasons:

- NEON’s purpose is standardized data collection spanning 30 years or more. The kind of research organization needed to operate consistently and long-term is very different.

- The risks are primarily in coordination and execution rather than in science or technology.

- It has many decision-makers and it would be hard to change direction.

- It took more than $400M just to build the field and lab infrastructure.

How do I operationalize all this?

You can operationalize these characteristics by creating a checklist and making sure that your program idea can tick off all the boxes (or you have a very precise way to get around that requirement).
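As a minimal sketch of that checklist, assuming you keep it as a small script rather than a document, the Python below encodes the characteristics from “What Ideas Make a Good Coordinated Research Program?” as short paraphrased strings. The names `CRITERIA`, `ProgramIdea`, and `open_items` are hypothetical, made up for illustration. A box counts as ticked either when the idea meets it or when you have written down a precise way around it; everything else is reported as open.

```python
from dataclasses import dataclass, field

# Hypothetical checklist: each entry paraphrases one characteristic from
# "What Ideas Make a Good Coordinated Research Program?"
CRITERIA = [
    "ambitious goal with a clearly defined scope",
    "some people think the goal might be impossible",
    "targets a high-leverage part of a bigger problem",
    "clear argument for why the goal is possible",
    "goal imaginably reachable in ~five years",
    "needs more than $1M but less than ~$50M",
    "neglected problem or very different approach",
    "clear theory of change",
]


@dataclass
class ProgramIdea:
    name: str
    # Criteria the idea clearly satisfies.
    met: set[str] = field(default_factory=set)
    # Criteria the idea misses, mapped to a written explanation of how it gets around them.
    workarounds: dict[str, str] = field(default_factory=dict)


def open_items(idea: ProgramIdea) -> list[str]:
    """Return criteria that are neither met nor covered by a non-empty workaround."""
    return [
        c for c in CRITERIA
        if c not in idea.met and not idea.workarounds.get(c, "").strip()
    ]


# Usage with a made-up idea: the first six boxes are ticked, the seventh has a
# written workaround, and the theory of change is still missing.
idea = ProgramIdea(
    name="hypothetical idea",
    met=set(CRITERIA[:6]),
    workarounds={CRITERIA[6]: "hypothetical: precise reason this requirement does not apply"},
)
print(open_items(idea))  # ['clear theory of change']
```

Treating a blank workaround as no workaround is a deliberate choice: the point of the exercise is to force a precise written answer, not a placeholder.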

Identify relevant bottlenecks and risks to make sure your goal focuses your limited resources on the most important challenge.

You may need to figure out in more detail what work needs to be done for the program and by whom before you can determine whether your coordinated research program is the right fit for an FRO, ARPA program, or other institutional structure.

The Mindsets of a Coordinated Research Leader

There are many mindset shifts between most other jobs and leading a coordinated research program. It’s impossible to enumerate all of them, but perhaps the top ten are:

- Extreme urgency

- Acting under uncertainty

- Bias towards action

- You are not a PI or a startup CEO

- Identifying ‘what’ not ‘how’

- Focusing on bottlenecks and key risks

- Starting with the end in mind

- Impact over novelty

- Turning the impossible into the inevitable

- If it doesn’t 10x, it’s not worth it

1. Extreme urgency

Most coordinated research efforts, whether they are ARPA programs or FROs, only last around five years. That may seem like a long time, but if you work backwards from an ambitious goal (like showing that it’s possible to make GPS units small enough to fit in someone’s hand when they are currently the size of a shipping container), things need to start happening from day one.

Furthermore, you need to effectively transmit this urgency to those around you: it’s not the normal mode of action for many disciplines or organizations and they will push back.

2. Acting under uncertainty

Acting quickly means making big decisions before you have complete information, not only because you don’t have the time to be 100% sure that an action is the best one, but because when you’re doing ambitious work, it’s often impossible to know what the optimal action is.

Concretely, this means not hesitating to send that email just because you’re unsure whether it’s going to the right person or whether you’ve done enough background research.

3. Bias towards action

If there’s a choice between an action and thinking more about a problem, go for the action.

Concretely, this means that the gap between saying you will do something and doing it should be very small. Have that meeting or send that email on Friday afternoon instead of Monday; pick up the phone or visit a lab instead of playing scheduling ping-pong; get started on the one project you’re sure about even if others are still up in the air.

4. You are not a PI or a startup CEO

The role of a coordinated research leader can look a lot like a PI of a lab or a CEO of a company, but there are several key differences that you always need to keep in mind:

- Performers and collaborators do not work for you. Almost all coordinated research programs involve working with researchers at other organizations, to varying degrees. With these people, you don’t have the ability to specify exactly what gets done and the feedback loops around the work are looser than if everybody were in the same organization. External work will often be proposed to you, as opposed to you specifying exactly who should do what. Even if you have a specific contract with external collaborators, the only real lever you have is whether to continue or end the contract.

- You rarely own the results of the program. The goal of a coordinated research program is to get the job done. If it were possible to do that while making money, you would have started a startup; if it were possible to do that as the first author on a paper, you could do it in an academic lab. A program might produce papers, IP, or companies but, if you focus on capturing those, you will hamstring the program’s success. To a large extent, running a coordinated research program is a service position: your job is to enable others.

- Your goal is not to build an empire. In most situations, your program and position will be temporary. You should not focus on building a legacy or a lasting entity – the job is to reach a finite, concrete goal.

As such, you need to constantly think about how you can convince people that their goals align with yours, and how to create communities and systems that will continue beyond the few short years that your coordinated research program exists.

5. Identifying ‘what’ not ‘how’

Your primary job is to identify what goals and pieces of work need to be done, not how to achieve them. You need to focus on the what and the why and not the how because you are not going to be the expert in all aspects of your program and because research ideas come from unexpected places and evolve over time.

Concretely, that means identifying the key metrics that need to be hit, the interfaces between different projects and outputs, and minimal system architectures but not becoming attached to any particular idea early in the program’s lifetime.

Focusing on ‘what’ over ‘how’ becomes more important the more external researchers you work with.

6. Focusing on bottlenecks and key risks

Thinking about how to enable technology that currently seems impossible can be overwhelming. One way to cut through the noise is to focus on two (connected) ideas:

- Bottlenecks: problems or capabilities that, if solved or created, would enable many other people and organizations to move forward on the technology you care about.

- Key risks: “sticking points” (especially facts about the world) that, if true, would make your program’s approach infeasible.

Creating projects to address bottlenecks and key risks is an effective way to move a program forward.

For more on bottlenecks and key risks, see Chapter 7.

7. Starting with the end in mind

It is important to work backwards not just from your program’s goals, but also from the broader technology impact you intend your program to unlock. You might unlock impact by creating a concrete technology or pathway to one, but you might also develop a new understanding of what the actual problem is, so that a future program can solve it.

Planning backwards from desired impact needs to include not just technical steps but human ones as well: what communities need to be formed, how the technology will be adopted, and so on.

8. Impact over novelty

It is tempting to focus on doing exciting new things that nobody has done before, but that isn’t the role of a coordinated research leader. Yes, your program will need to do new things, but you should actually do the bare minimum of new things in order to accomplish your goals.

9. Impact happens through other people

More than anything else, a coordinated research program is about changing people’s minds and behavior.

Whether you are creating a dataset, a tool, or a demo, you will need many other people to take action based on your work in order for it to be impactful.

For example, the goal of an ARPA program is to make a specific set of people realize that something they previously thought was impossible or extremely unlikely (or hadn’t thought about at all) is instead possible and something they should be working on. What that change looks like will depend heavily on your domain and the outcomes you’re hoping to achieve (this is a big reason your job is hard!).

Figuring out what you need others to do based on your program is why the idea of a “theory of change” is particularly important.

10. If it doesn’t 10x, it’s not worth it

One of the core goals of a coordinated research program is to enable work that wouldn’t happen in other organizations because of its high chance of failure. The other side of that coin is that if the program does succeed, it needs to create a reward that was worth that risk. There are plenty of other organizations that will work on incremental improvements or small wins.

A heuristic for whether a program’s goals are ambitious enough is whether, if successful, the program will create an “order of magnitude” improvement over how things are done today. In some cases, order-of-magnitude improvement may be straightforward, such as reducing the size of an instrument or cost of a material by 10x or increasing the maximum power output of a system by 10x.
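For the quantifiable cases, the 10x heuristic reduces to a ratio check, as long as you remember which direction counts as “better” for each metric. The sketch below is a minimal illustration under that assumption; the function names and all numbers are hypothetical, and it does not capture step-changes that aren’t easily quantified.

```python
def improvement_factor(current: float, target: float, higher_is_better: bool) -> float:
    """How many times better `target` is than `current` on one positive metric."""
    if current <= 0 or target <= 0:
        raise ValueError("metric values must be positive")
    # For size or cost, smaller is better; for power output, larger is better.
    return target / current if higher_is_better else current / target


def is_order_of_magnitude(current: float, target: float, higher_is_better: bool) -> bool:
    """The 10x heuristic: does hitting the target improve the metric at least tenfold?"""
    return improvement_factor(current, target, higher_is_better) >= 10


# Made-up numbers, for illustration only.
print(is_order_of_magnitude(current=200.0, target=20.0, higher_is_better=False))  # True (cost cut 10x)
print(is_order_of_magnitude(current=5.0, target=40.0, higher_is_better=True))     # False (power up only 8x)
```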

In other situations, “order of magnitude” improvement is fuzzier. When the improvement isn’t easily quantifiable, it needs to result in a step-change, enabling things that were previously impossible, such as getting autonomous cars to finish a course that nobody could previously finish, or networking together multiple computers from thousands of miles apart in a scalable way.

Always think about multiple timescales and priorities simultaneously.

You need to think about making concrete personal progress on the timescale of a week, technical program goals on the timescale of a year, and broader impacts on the timescale of a decade. At the same time, you need to consider all at once the incentives of researchers, ambitious (but not impossible) technical goals, how to make the work as general purpose as possible while still being pragmatic, and how the technology is going to get into the world.

The questions you should be obsessed with:

- What research will not happen under the current system? One way to break that question down is to ask what work is:

  - Too weird for most government funders?

  - Too engineering-heavy, not novel enough, or coordination-heavy for one or more labs to self-organize around?

  - Too researchy or high-uncertainty for a startup?

  - Too unaligned with current paradigms for a big company to take seriously?

- If something would be incredible if it worked, why hasn’t it been done yet? Answer with as much specificity as possible.

- Where are the weirdos? It’s easy to gravitate to the people doing good work at top labs, but they are often well-funded already and good at pushing out their ideas. Think outside of the usual big cities. (Of course, the best people are often the best for clear reasons, and sometimes even they are unable to work on something important.)

- What happens after this program? While we’re not in the business of developing products, we are in the business of unlocking impactful technologies. In order for technologies to have an impact, they need to be carried forward by people, regardless of whether that happens in the form of a further research program (with its own theory of change), a nonprofit organization, a community using open-source technology, a startup, companies incorporating it into their own product line, or something else. DARPA has a mixed record on transitioning in part because it rarely thinks about what happens after a program ends until the program is almost over.

- What are fast, clever experiments that could answer crux-like questions about risks or possibilities? When doing something other people think might be impossible, you want to begin answering key questions as soon as possible. Early derisking projects help focus a program and can help you sell your program to more funders, collaborators, and transition partners.

The Different Roles and Phases of Running a Coordinated Research Program

The leader of a coordinated research program needs to wear many hats. This is one of the reasons why the job is so hard! These hats roughly correspond to the different phases of a program. Leading a program through each phase is almost a different job.

The phases are:

It’s important to note that these phases are not rigidly sequential. They bleed into each other, and you need to work on several at the same time. You should consider tech transition even while doing exploration and field mapping, keeping an eye out for who will shepherd the technology beyond your program and what those people care about. You will need to update your program design in real time as you execute on it and run into previously unknown unknowns. And you should have a communicable vision from day one, updating it as you explore and refine ideas.

1. Exploration and Field Mapping

The goals of exploration and field mapping are to:

- Have a working knowledge and network of the people and organizations working adjacent to your program’s goal.

- Understand current practice well enough that you can evaluate people and ideas: who is good; who is moving slowly because they are slacking and who is merely working on something incredibly hard; whether you are asking performers to do something impossible or merely very hard.

- Understand the limitations of current practice well enough that you can go several layers deep and identify unintuitive gaps or bottlenecks (see Chapter 7, “Identifying bottlenecks and key risks”).

Your role here is similar to a startup founder doing customer exploration.

You probably come into a program with a hunch. If you don’t, create one. Exploration without an initial hunch to direct your search is far less effective. The point of a coordinated research program is to reach a goal. It’s tempting (and a commendable urge) to leave yourself completely open to serendipitous goals, but, counterintuitively, having a goal makes you more likely to find different goals, because you have something to compare and contrast them against.

Field mapping is the process of figuring out the state of a “field”: the rough web of interconnected ideas. Who is working on what? What things are not being worked on? Why?

Some concrete tactics for exploration and field mapping:

- Creating a list of research programs that have attempted something similar in the past and talking to the people who ran those projects. (If you think that nothing like what you’re thinking about doing has been done, you’re wrong.)

- Creating a list of 100 researchers and companies that seem to be working in or peripheral to your goal and talking to them.

- Reading a few review papers to identify people who might be good initial nodes in a network to reach out to. This is a reasonable starting point.

- Writing down intermediate syntheses, hypotheses, and conclusions from conversations in long-form text. Long-form writing (as opposed to bullet points) is important because it enables you to make causal connections between the different things you’re exploring and to notice unintuitive gaps or consistent blockers, both of which can be fruitful things for a program to go after. Update these notes as you learn more. Linked-notes tools like Bear or Obsidian can be useful for this, but Google Docs, paper, or the built-in notes apps on Windows or Mac are fine: whatever works best for your brain.

All of these require navigating a network (Chapter 6).

It’s tempting to do an exhaustive search of all potential people and ideas in a space, but this is a bad idea. This phase of the program should be as short as possible, but no shorter! A good heuristic for when you’ve done enough exploration and field mapping is when your network exploration starts to close loops. When you’ve fully explored a space, you’ll start getting pointed back to the same things you’ve already dug into.

Related chapters:

- Identifying who cares (Chapter 5)

- Navigating a network (Chapter 6)

- Identifying performers (Chapter 13)

2. Refining Ideas

The goal of refining ideas is to identify:

- A concrete program goal that is both incredibly aggressive and potentially achievable within the program’s lifetime.

- Milestones that would suggest the program is moving towards that goal.

- The different thrusts that a program would need to address that goal.

- The key risks to the program (see Chapter 7, “Identifying bottlenecks and key risks”) and what evidence would suggest that you have mitigated them.

Refining ideas requires both thinking and talking. You need deep thinking to connect and distill the pieces of information that you’ve gathered while exploring and field mapping, and then you need to talk to people to test those ideas against the world.

Your role here is that of an analyst and researcher.

Refining ideas should happen in a loop with exploration. A conversation might generate an idea for an impactful sub-goal; running that idea by the same experts (or different ones) can then give you more unintuitive nuance on it. Remember, people default to talking at higher levels of abstraction, so it’s up to you to make the ideas more precise.

Some concrete tactics for refining ideas:

-

Running workshops

-

Requests for information

-

Circling back with people you talked to during exploration with a concrete hypothesis for them to react to

Related chapters:

-

What ideas make a good coordinated research program? (Chapter 2)

-

Identifying bottlenecks and key risks (Chapter 7)

3. Program Design

The goal of program design is to create the structure of a program:

-

What work actually needs to be done to achieve the program’s goals?

-

What kind of projects are best suited to encourage that work?

-

How do those projects need to interact?

-

What needs to happen at the end of a program so that it can have impact? Working backwards, what do you need to put in place during the course of the program so that this can happen?

Your role here is a strategist, laying out the troop movements.

Some examples:

-

You might determine that your program’s goal can be achieved with widely-available resources combined with a hard-to-create dataset. In this situation, a good program design might consist of one or more projects to create the dataset combined with a competition. (This is roughly the shape of the Vesuvius Challenge.) Those projects and the competition need their own design: how do you know they’re successful, what timelines do they happen on, and how will the competition be run and managed?

-

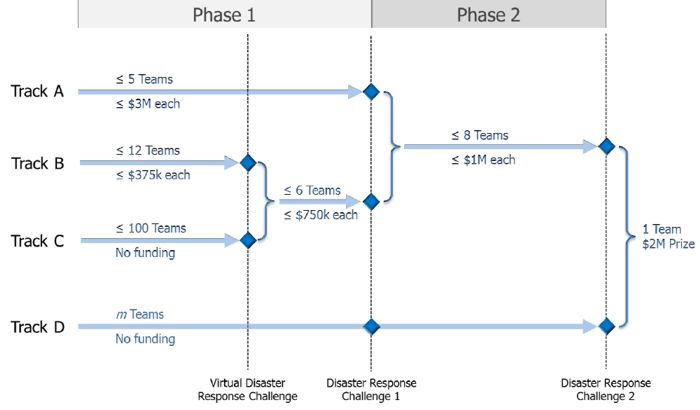

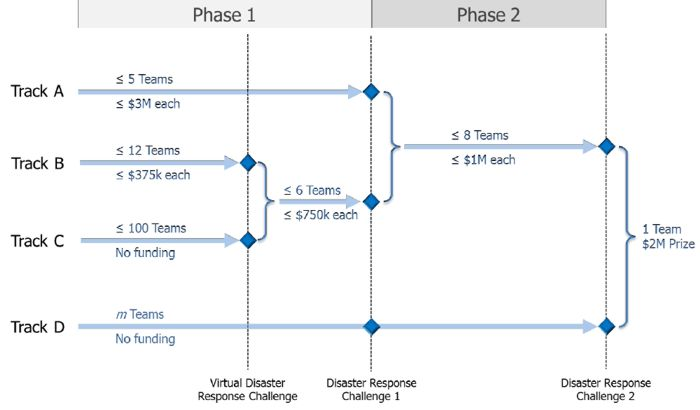

You might determine that there are two very different approaches that could potentially achieve the program’s goal. One approach is at a higher Technology Readiness Level (TRL) but faces a lot of scaling risks, while the other is at a lower TRL but will clearly scale if it addresses early risks. In this situation, a good program design might consist of one track that funds startups working on the higher-TRL approach and another track funding labs working on components of the lower-TRL approach. The program’s existence will make sure that the labs coordinate towards a coherent output and that, if the startups fail to scale the higher-TRL approach, they might be able to adopt the other approach, which the labs have matured.

Concrete tactics for program design:

-

Writing down the ideal end state for the program and a causal narrative in as much detail as possible for how that would feasibly happen.

-

Creating block diagrams.

-

Putting out requests for proposals.
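If it helps to make a block diagram concrete, the “how do those projects need to interact?” question can be written down as a dependency graph and checked mechanically. The sketch below is illustrative only: the project names and dependencies are hypothetical, loosely echoing the dataset-plus-competition example above, and it uses Python’s standard-library `graphlib` module (Python 3.9+) to find one order in which projects could start and everything a given milestone transitively depends on.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical program structure: each project maps to the set of projects it depends on.
# These names are illustrative, not taken from any real program.
DEPENDENCIES = {
    "imaging_hardware": set(),
    "dataset": {"imaging_hardware"},
    "baseline_models": {"dataset"},
    "competition": {"dataset", "baseline_models"},
    "transition_plan": {"competition"},
}


def project_order(deps):
    """Return one order in which projects can start so that every dependency comes first.

    Raises graphlib.CycleError if the dependencies are circular.
    """
    return list(TopologicalSorter(deps).static_order())


def upstream_of(deps, target):
    """Return every project that `target` depends on, directly or indirectly."""
    seen = set()
    stack = list(deps.get(target, ()))
    while stack:
        node = stack.pop()
        if node not in seen:
            seen.add(node)
            stack.extend(deps.get(node, ()))
    return seen


if __name__ == "__main__":
    print(project_order(DEPENDENCIES))
    print(sorted(upstream_of(DEPENDENCIES, "competition")))
```

Working backwards from the end state amounts to calling `upstream_of` on the final milestone: everything it returns has to be in place during the program for that milestone to happen.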

Related chapters:

-

Planning for transition (Chapter 9)

-

Program failure modes (Chapter 10)

-

Mapping incentives (Chapter 11)

4. Communicating Vision

The only way your program will be successful is if you are able to convince and align several different groups:

-

Great collaborators (performers, employees, etc.)

-

The people holding the purse strings

-

Whoever is going to carry the technology on beyond your program

Your role here is to be a keynote speaker, a general rallying your troops.

In order to get any groups on board with the program’s vision, you need to have a clear idea of what it is. (This goes back to creating concrete goals.)

Communicating the program’s vision to collaborators is critical because:

-

People who feel like they’re working towards a shared vision do better work.

-

In part, your program will succeed because people will continue to work towards its vision even after the program ends.

-

Even collaborators who are paying lip service to a vision because you can throw around serious chunks of money need to understand it to be effective. There are so many small decisions that can’t be captured by metrics that collaborators will fail to do good work (and you might have to pull their funding) if they don’t know where that work is targeted.

Concrete tactics for communicating vision:

-

Always have an up-to-date…

-

One- or two-sentence blurb that you (or people making ‘double-opt-in intros’ for you) can use in emails.

-

One-to-two-page document that explains the current working ideas of your program.

-

Slide deck you can draw on to illustrate the ideas in your program that are easier to communicate visually.

-

After every conversation you have, write down:

-

Questions or points of confusion that came up

-

Which specific things you said that resonated or fell flat

-

Points of regret or things you could have explained better

-

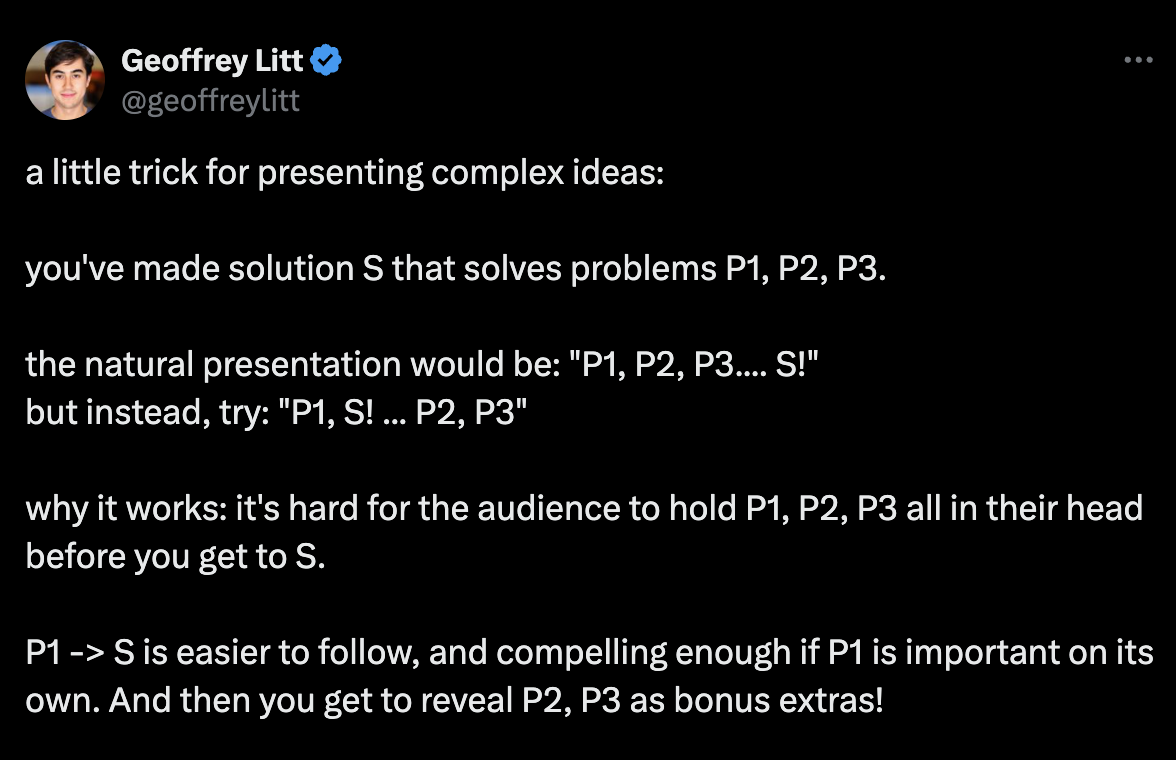

Pay attention to the Pyramid Principle: start with the program’s bottom line up front (“we’re working on tools to 10x the production of X”) and then go into details.

-

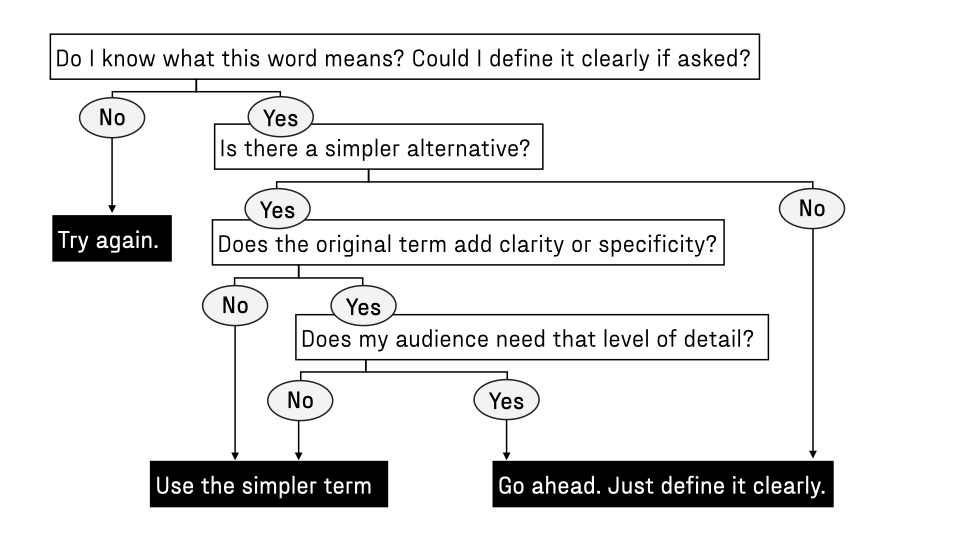

Simplify your language as much as possible, but no further. Even technical people secretly like straightforward explanations and you will need to communicate across a number of disciplines. AI tools like LLMs can help with this.

-

Start with concrete examples and stories and then work your way to abstractions.

Related chapters:

-

Selling a program (Chapter 12)

-

Communicating your vision (Chapter 14)

5. Program Engineering

Once you have a precise program design and your program is funded, you need to get into the weeds of who is doing what. This means soliciting proposals, negotiating contracts, and generally putting rubber to the road.

Your role here is a tactician, a salesperson, and a contract lawyer.

Ultimately, no plan survives first contact with reality intact. You will have to constantly adjust your program design based on who you can get on board and what their demands are once you start getting into specifics.

In this phase, it really pays to understand the nitty-gritties of the processes in your specific organization: what kind of contracts you can write, what the process looks like, and who is in charge of it. Doing this will allow you to have as much freedom and power as you can without getting sued, fired, or going to prison.

It’s hard to give generic, concrete advice at this stage, but some meta-concrete advice:

-

Do the legwork to understand the specific constraints in your organization on what sort of contracts you can write.

-

Always keep planning for transition in mind, especially around IP.

-

Make friends with your legal team!

-

Read the fine print.

-

Everything is negotiable. Many organizations may say, “This is how we do things. Full stop,” but given the right incentives, that is never the case.

-

Negotiating contracts always takes longer than you expect.

-

Keep in mind that nothing is a done deal until the legal contract is signed and the money is out the door. (And even then, things can go south.)

The classic book out there on negotiation is Never Split the Difference by Chris Voss & Tahl Raz, which is well worth reading before you get to this phase of your program.

Related chapters:

-

Institutional moves (Chapter 16)

-

Identifying performers (Chapter 13)

-

Mapping incentives (Chapter 11)

-

Knowing when to quit and setting up kill criteria (Chapter 17)

-

Planning for transition (Chapter 9)

-

Metrics (Chapter 18)

-

Budgeting for a coordinated research program (Chapter 15)

6. Program Execution

Program execution is when the “actual” research happens: contracts are signed, money is out the door, and researchers are measuring, mixing, and tinkering. Ironically, as the program leader, you will have fewer things to do on a daily basis.

Your role here is that of a project manager and CEO.

Treating projects within the program as “fire and forget” is often a mistake for several reasons.

-

Work expands to fill the time allotted to it. Without tight feedback loops with people doing the hands-on work, whether they are external collaborators or employees, things will often take longer in a compounding way. This isn’t to say you should be breathing down their necks, but it’s important to touch base regularly.

-

Success will often require making adjustments to the program over time. The more in the loop you are, the smaller those adjustments need to be to keep things from going off the rails. There is a tension here because you also don’t want to micromanage.

-

Some of the power of a coordinated research program comes from cross-pollination between the different projects. It takes work to make sure that they are talking to each other regularly.

(Of course, the relative importance of these different factors depends on what sort of program you have and what kind of program leader you are.)

Some concrete tactics:

-

Have regular in-person check-ins with performers. It’s one thing for people to say “everything is fine” on the phone but a very different thing when you can see the lab.

-

Have gatherings for any external collaborators with plenty of unstructured time.

-

Be willing to kill projects. It’s incredibly hard, but can free up resources for other work.

-

Be willing to double down on projects that are going very well.

Related chapters:

-

How to write a good solicitation (Chapter 19)

-

Knowing when to quit and setting up kill criteria (Chapter 17)

-

Program failure modes (Chapter 10)

-

Planning for transition (Chapter 9)

7. Tech Transition

In order for a program to have an impact, the technology needs to transition beyond the program. “Transition” can mean many things: continuing as an active area of research in other organizations; being incorporated into different products; spawning one or more startups; becoming an open source project; and many others.

Your role here is a business development person and advocate.

You need to start planning for this phase of the program long before the end of the program.

Realize that technology ultimately lives in people’s heads. The more of the people who worked on the technology during the program you can enable to keep working on it afterwards, the more likely it is to succeed.

Ultimately, you need to give up control over something that has been your baby for years.

You can see concrete tactics in Chapter 9, “Planning for transition.”

Related chapters:

- Planning for transition (Chapter 9)

The adventure begins

This playbook is a hopefully useful piece in a bigger toolkit that can enable you to lead amazing research programs. You can (and should!) complement this playbook with two other resources. First, do deep dives on the stories of previous programs – both successes and failures – that bear a family resemblance to what you’re setting out to do. Second, find people who have done similar things before and talk to them about what you’re trying to do, ideally more than once. Successfully leading a program requires wearing many different hats at the same time. Some of these roles will inevitably be in tension with one another. The skill of being a program leader is knowing which tools and approaches to prioritize and when. Some of that can come from external sources, but at the end of the day, the responsibility of making that call rests on your shoulders. Good luck!

Identifying Who Cares

One of the hardest questions of the “Heilmeier Catechism” is the first half of question number four: “Who cares?”

Answering “Who cares?” is tricky because a direct answer misses the intent behind the question, which is the part that will actually help your program succeed. The initial answer is often along the lines of “a few researchers.” Most people are not invested in things they think are impossible, so if your program is genuinely trying to turn the impossible into the inevitable, most people beyond a few diehards will not care.

A more useful framing for this question is: “Who would care about the program’s results if it were successful? And what difference would it make to them, both short-term and long-term?” Or put differently: “Who will see the output of the program and think ‘Ah! That could be valuable to me,’ and ideally imagine a trajectory where it delivers that value?”

Pragmatically, “who cares?” is about the program’s output because the technology needs a home at the end of a program. The world is littered with the cold, stillborn bodies of promising technologies that nobody cared about enough to nurture until they could survive on their own. That home could be as humble as a nonprofit, a smattering of labs with funding from other organizations, or startups that still need to do a lot of work. But in order for even those things to happen, both people doing the work and people who will fund those homes need some promising evidence that they’ll be able to achieve their goal. One of a program’s jobs is to give them that evidence. “Who cares?” tells you who that evidence is for, and therefore what that evidence needs to be.

For better or worse, every program needs a narrative about why it is worth deploying scarce resources of time and money on: this is what will get it funded, align people who are working on it, and act as a discriminator on how to prioritize work. One of the most powerful narrative structures is: “if we can do X, it will remove bottleneck Y, which will enable Z.”

One framing for a program is that “a particular group would do something drastically differently if these specific technical things were different.” When we talk about “who cares,” we’re talking about that particular group.

Figuring out who cares is extremely hard! Answering that question well is a big chunk of program design.

Pragmatically, how do you figure out who cares?

In order to answer the question, you need to talk to a lot of people. There are, of course, exceptions if you’re building the thing for a broader group you’re part of, but this situation is rare. Building a thing because you think it’s cool or important doesn’t count as “building a thing for a broader group you’re part of” — you need to be building it because the group will want to use it to do something they find valuable.

Before talking to anyone, you should have a hypothesis about a group and what they might find useful. (E.g., people who build membranes care about having better control over microscale pore structure.) You shouldn’t come out of the gate asking about it directly (be intensely curious about their context instead), but it’s important to have some anchor in your head to bias your line of questioning. Otherwise, conversations tend to stay incredibly abstract and not particularly useful.

Start by simply trying to understand what the constraints on their work are and what they care about. Ideally you lead them as little as possible, but you do need to give some framing about why you’re asking questions, e.g., “I’m working on a program to explore X” and maybe “I have a hypothesis that Y.” If you’re lucky, they’ll riff off of that, but most of the time they won’t.

Some things to keep in mind:

-

These people often don’t care how the technology you’re working on works as long as it stays within their constraints.

-

You should never ask people “What do you want?” or “What would you care about?” They will never give you a good answer, à la the supposed Henry Ford quote: “If I asked people what they wanted, they would tell me ‘a faster horse.’”

-

You should rarely even ask “Would you care about <this specific program output>?” because people won’t tell you the truth: either they’ll say “yes” to make you feel good or “no” because they have no imagination. You need to come at it sideways.

-

The trick is to iterate on hypotheses until you have something nuanced and precise that will stand up to scrutiny.

Note: This is secretly all startup customer discovery advice.

Navigating a Network

A large part of designing a program involves navigating networks that you may not be part of and creating new ones.

What are the goals of these networks?

-

Finding potential collaborators – employees, performers, or others

-

Finding potential “customers” for the program

-

Unearthing unexpected directions

-

Understanding what different communities think about your program

-

Identifying bottlenecks and key risks

Depending on what you are looking for, you’ll want to talk to people in different positions:

-

If you are looking for external collaborators, aim to talk to decision-makers, whether it’s a PI of a lab or someone with equivalent authority in a company. It’s easier to get in touch with postdocs/staff researchers and often they will be more excited and even have better ideas. However, to create performers, you need to get people with decision-making authority on board. (Of course, excited non-decision-makers can connect you to decision-makers.)

-

Similarly, if you are looking for potential “customers,” you want to talk to decision-makers who could affect the technology’s ability to move forward.

-

If you are looking for bottlenecks and risks, you’ll want to talk to a wide array of people who have been around a subject for a while. Most important bottlenecks and risks around technologies only make themselves apparent with sufficient context and experience. However, you don’t want to take any one person’s view on it because it’s easy for single experiences to determine what someone thinks is feasible.

Whether or not you are part of a network primarily affects how you begin working your way through the network:

-

When you are already part of a network: Start with the people you have the most social capital with — people who you can ask dumb questions of and who are the most likely to be generative.

-

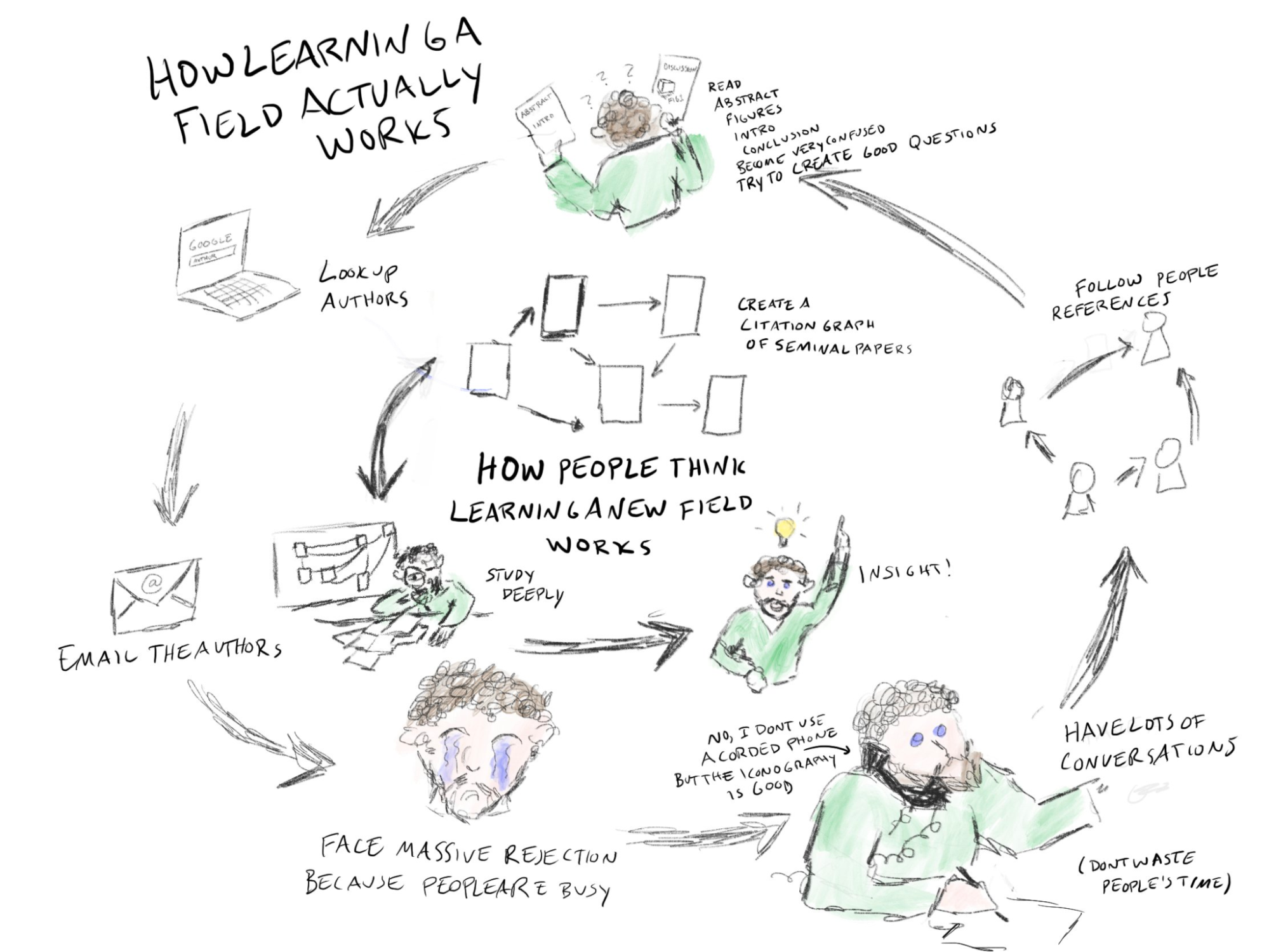

When you are not already part of a network: Before diving into a network that you’re not yet part of, it’s helpful to read some literature (blog posts, papers, parts of books) just to get an anchor point. These will probably be the wrong resources but it gives you a starting point for both people and questions. (Don’t spend too long on the literature! It’s the sort of thing you can spend infinite time on.) Once you have some initial questions, reach out to corresponding authors on papers that seem particularly relevant and go to conferences to just wander around and talk to people.

It will be tempting to talk to the highest-status people in a network — they will be the most salient both from the literature and from people’s recommendations. However, there’s a strong correlation between individuals being high-status and having their own strong agendas.

Keep in mind that you don’t need to win over everybody — you just need to find the people who are potential allies. Most people you will talk to won’t be useful.

You do not need to do everything that everybody you talk to recommends — this would take much more time than you have and you’ll likely get contradictory advice. There is a skill to sorting through which pointers — people and resources — are worth your time.

Something to watch out for is that it’s easy to over-index on what academic researchers think is important and possible. There are several reasons for this: academics write the vast majority of papers, which are good starting points for digging into an area; they’re generally more open to talking; and their emails are just easier to find! However, academic research priorities and challenges are often different from the ones you need to tackle to have a large impact (unless you’re simply trying to advance an academic field).

General Tactics

There are several concrete tactics that apply to navigating any network, regardless of whether you are part of it or not:

-

Always have some “hypotheses” that you’re gathering evidence for or against as a way of directing your network navigation. These are hypotheses about your program – what’s important, specifics of what you should focus on, theories of change, etc.

-

Take advantage of LinkedIn’s 2nd degree connection feature to figure out who you know who might know a person you’re trying to talk to.

-

Reach out early, reach out often. You need to reach out to many more people than you think you do. For every good conversation, you will have many bad conversations and even more ignored emails.

-

Always follow up. Just because someone didn’t respond doesn’t mean they don’t want to talk to you; people are just busy. I generally follow up once per week until I’ve followed up three times or they have explicitly told me they’re not interested.

-

Pay attention to institutional affiliations — companies, universities, specific labs and teams — and when people were at that institution to see whether they might be able to connect you to a target.

-

Pay attention to coauthors for the same reason.

-

Use some kind of software to track people. Ideally, you will be talking to far more people than you can keep in your head. This tracking software can be as simple as a spreadsheet; other options include Airtable, Folk[1], Notion, and HubSpot, to name a few. None of these is the “best”: the best tool is the one you’ll use.

-

It’s generally harder to talk to people at non-academic organizations (companies, the government, etc.), but it’s incredibly important! Some tactics for getting to folks:

-

Talk to former employees: they usually know people who are still there and may also be able to tell you the information you’re looking for.

-

If you want to talk to a startup about their technology it’s often more productive to talk to people who worked for a failed startup in the same space.

-

Organizations often have standard formats for their emails – if you can figure that out (there are services online that help) you can cold email people.

-

Reaching out to people on LinkedIn has a low hit rate but is worthwhile.

-

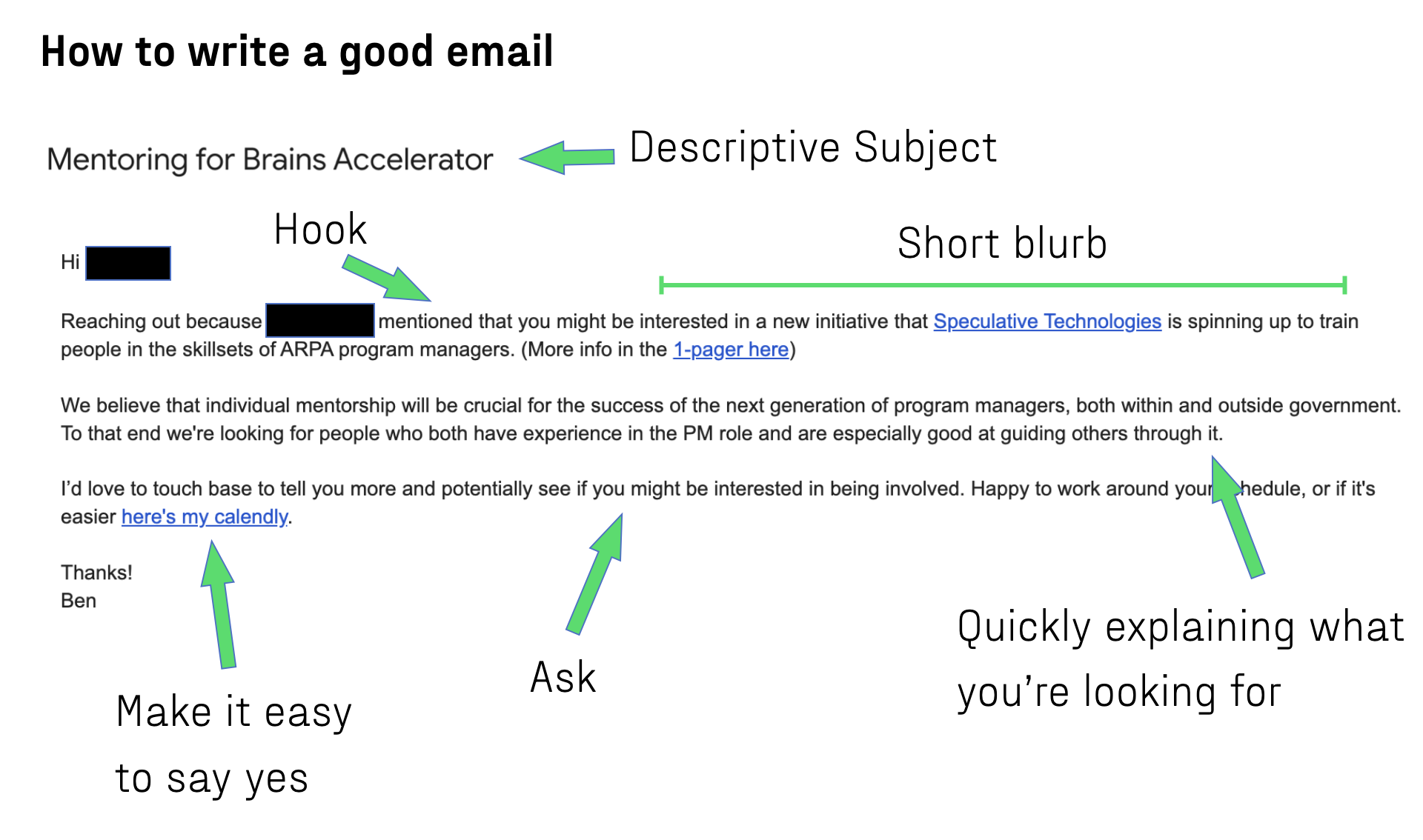
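The weekly, three-follow-up rule from the list above is easy to lose track of once you are juggling dozens of threads, which is one reason to track people in software. As a minimal sketch, assuming you record for each person the date of your last email, how many follow-ups you have sent, and whether they replied or declined (the `Contact` fields and helper names are hypothetical, not features of any particular tool), the rule looks like this:

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Optional

# Cadence described in the text: follow up once per week, at most three times,
# and stop as soon as the person replies or says they are not interested.
FOLLOW_UP_INTERVAL = timedelta(weeks=1)
MAX_FOLLOW_UPS = 3


@dataclass
class Contact:
    name: str
    last_contacted: date  # date of the initial email or the most recent follow-up
    follow_ups_sent: int = 0
    replied: bool = False
    declined: bool = False


def next_follow_up(contact: Contact) -> Optional[date]:
    """Return the date the next follow-up is due, or None if outreach to this person is finished."""
    if contact.replied or contact.declined or contact.follow_ups_sent >= MAX_FOLLOW_UPS:
        return None
    return contact.last_contacted + FOLLOW_UP_INTERVAL


def due_on_or_before(contacts: List[Contact], today: date) -> List[str]:
    """Names of contacts whose next follow-up is due on or before `today`."""
    due = []
    for contact in contacts:
        when = next_follow_up(contact)
        if when is not None and when <= today:
            due.append(contact.name)
    return due


if __name__ == "__main__":
    contacts = [
        Contact("Researcher A", last_contacted=date(2024, 3, 1)),
        Contact("Engineer B", last_contacted=date(2024, 3, 8), follow_ups_sent=1),
        Contact("Founder C", last_contacted=date(2024, 2, 1), follow_ups_sent=3),
    ]
    print(due_on_or_before(contacts, today=date(2024, 3, 11)))  # ['Researcher A']
```

A spreadsheet with the same columns and a due-date formula works just as well; the point is that the rule lives somewhere other than your memory.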

Email Tactics

-

Having a good blurb about who you are and what you’re doing is critical. A good blurb is short (at most three sentences) and gives the most important information up front. Ideally it’s customized to your target’s interest. (Here are some examples of blurbs).

-

Employ the double opt-in intro when possible (really do read this if you haven’t heard the term before).

Conversation Tactics

-

Do some research on people before you talk to them: read their CV, skim the abstracts of their papers, look at their website. Doing your research lets you ask much better questions and flatters people, making them more likely to help you.

-

While you do want to leave room for serendipity, it’s important to have concrete questions going into every conversation. Most people will default to talking about higher levels of abstraction but lower ones are more useful. Additionally, concrete questions are better hooks to get people to talk to you in the first place. Ideally, people would understand what you’re going for and summon ideas for other people you should talk to and useful ideas you should pursue.

-

In every conversation, make sure you ask “who else should I talk to?” (Ideally, this question should be about specific things that came up in the conversation.)

-

If they recommend people you should talk to, make sure to ask, “Can you make those introductions?”

-

Follow up conversations with a thank you, a short recap of what you talked about (in case you remembered something wrong or they have additional thoughts), and a list of the people who they are going to introduce you to.

How do you know when to stop?

You can do this kind of network exploration forever, but because you need extreme urgency and a bias towards action, at some point you need to decide that you’ve talked to enough people (of course you should continue talking to people, but it needs to become a back burner activity). Three ways to make this call are:

-

Pay attention to your learning rate. When you notice that you tend to hear the same things in each conversation, it’s not as necessary to have more conversations.

-

Pay attention to people-loops closing. When the people you’re talking to tend to recommend talking to the same set of people that you’ve already talked to, you may have exhausted the experts in an area. (Of course, there could be a disconnected cluster of people you haven’t reached.)

-

Time boxes. Give yourself clear deadlines to stop being in full conversation exploration mode. Frankly, this is probably the most straightforward and pragmatic approach.
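The first two signals can be made slightly more concrete if you already log each conversation in a tracker. A minimal sketch, assuming you record the ideas you heard and the people you were pointed to after each conversation (the function names and example data are hypothetical): a learning rate that drops towards zero new ideas per conversation, and a loop-closure rate approaching 1.0, both suggest diminishing returns. Any cutoff you pick is a judgment call, not a rule from this playbook.

```python
from typing import Iterable, List, Set


def new_ideas_per_conversation(ideas_by_conversation: List[Set[str]]) -> List[int]:
    """For each conversation, in order, count the ideas not heard in any earlier conversation."""
    seen: Set[str] = set()
    counts = []
    for ideas in ideas_by_conversation:
        counts.append(len(ideas - seen))
        seen |= ideas
    return counts


def loop_closure_rate(recommended: Iterable[str], already_contacted: Set[str]) -> float:
    """Fraction of recommended people you have already talked to (0.0 to 1.0).

    Returns 0.0 when there are no recommendations, since that says nothing about closure.
    """
    recs = set(recommended)
    if not recs:
        return 0.0
    return len(recs & already_contacted) / len(recs)


if __name__ == "__main__":
    # Hypothetical notes from three conversations.
    notes = [{"cost", "scale-up"}, {"cost", "yield"}, {"scale-up", "yield"}]
    print(new_ideas_per_conversation(notes))  # [2, 1, 0]
    print(loop_closure_rate(["Ana", "Ben", "Chen", "Dee"], {"Ana", "Ben", "Chen"}))  # 0.75
```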

Identifying Bottlenecks and Key Risks

There are an infinite number of things one can do to advance ambitious technology. Identifying bottlenecks and key risks is a powerful way of cutting through the noise: it helps you ensure you’re working towards a high-impact goal, prioritize your program’s research activities, and sell ideas to stakeholders.

Bottlenecks and key risks are nebulous concepts: neither has hard boundaries that define what does or does not “count.” To give rough definitions:

-

Bottlenecks are the problems/capabilities that, if solved/created by a program, would enable many other people and organizations to move forward on the technology you care about.

-

Key Risks are “sticking points” (especially facts about the world) that if true, would make your program’s approach infeasible.

Some heuristics for how to conceptualize bottlenecks and key risks:

-

Bottlenecks are obstacles that a program can address, while key risks are things that might prevent you from addressing a bottleneck.

-

Bottlenecks are the rate-limiters on progress in an area. Technical progress is much more abstract than manufacturing, but the analogy to a factory where one machine processes parts much more slowly than all the others can be useful.

-

Technology bottlenecks usually take the form “Some group, A, would do B if C were different.” C is the bottleneck.

-

Key risks are often the reason that other people think what a program is trying to do is impossible.

-

Risks are the assumptions you need to test in order to find a solution to a bottleneck.
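The factory analogy has a simple quantitative core: when stages run in series, overall throughput can be no higher than that of the slowest stage, so speeding up any other stage changes nothing. The sketch below illustrates this with made-up stage names and rates (hypothetical units per day); real technical progress is rarely this tidy, which is why the analogy is only a heuristic.

```python
from typing import Dict, Tuple


def pipeline_throughput(stage_rates: Dict[str, float]) -> Tuple[str, float]:
    """Return (bottleneck stage, overall rate) for stages that run in series.

    Every unit must pass through every stage, so the pipeline can move no faster
    than its slowest stage.
    """
    bottleneck = min(stage_rates, key=stage_rates.get)
    return bottleneck, stage_rates[bottleneck]


if __name__ == "__main__":
    # Hypothetical capacities, in units per day.
    stages = {"synthesis": 100.0, "purification": 8.0, "testing": 40.0}
    print(pipeline_throughput(stages))  # ('purification', 8.0)

    # Doubling a non-bottleneck stage leaves overall throughput unchanged...
    print(pipeline_throughput({**stages, "testing": 80.0}))  # ('purification', 8.0)

    # ...while doubling the bottleneck doubles it.
    print(pipeline_throughput({**stages, "purification": 16.0}))  # ('purification', 16.0)
```

In these terms, removing a bottleneck means raising the `purification` rate, while going around it means eliminating that stage entirely, after which `testing` becomes the new limit.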

There are two ways of dealing with bottlenecks: removing them or going around them. Removing a bottleneck looks like shrinking a critical system from the size of a shipping container to the size of a briefcase. Going around a bottleneck looks like removing the need for that system altogether.

Bottlenecks help you identify specific, focused goals that can deliver the highest impact. A coordinated research leader develops hypotheses about how to deal with those bottlenecks. Key risks guide the research you need to do during the program to test your hypotheses and achieve your goal.

When you are designing your program, it’s critical to address the key risks first (this is another framing of the idea of “failing fast”). It’s tempting to start with the easy stuff, but that work may be moot if it turns out you cannot mitigate a key risk (see Tackle the monkey first).

Some examples of technological bottlenecks and key risks:

-

One bottleneck to cheap spaceflight is reusable launch systems. Some key risks for creating reusable launch systems are rocket engines that can restart consistently and control systems to land a rocket successfully.

-

One bottleneck to rapidly developable vaccines was a mechanism for creating specific antigens on demand. A key risk for RNA vaccines addressing this bottleneck was finding a way to encapsulate the RNA.

At the end of the day, you need to drill down to the specific bottlenecks your program can address and the key risks that can be addressed by individual projects within your program. It’s straightforward to say something like “cost is bottlenecking carbon removal,” but drilling down into actionable, technology-based drivers of those costs is hard.

Brass Tacks and Tactics

Identifying the bottlenecks and key risks (especially to an actionable level) is not straightforward.

People don’t often think in terms of bottlenecks and risks, especially at the level of a whole technology instead of their own personal projects. As a result, you can’t just read the answer in literature or ask people, “What are the bottlenecks in your area?”

Here are some tactics for teasing out key risks and bottlenecks:

-

Drawing block diagrams and fishbone diagrams can help identify the causal connections in a system and enable you to trace a high-level bottleneck to an actionable source.

-

Sankey diagrams can help identify the biggest contributors to some flow (e.g. identifying why something is expensive).

-

Fermi estimates and breaking down the physics behind a system are helpful for identifying bottlenecks and key risks. For example, “if we were to build this at an impactful scale, it would [require all the niobium in the world]/[cook a human brain with the amount of energy it puts in]/etc.”

-

When you are talking to experts in an area who don’t think that an idea will work, dig into why they don’t think it will work. These are likely to be some of the key risks: if the experts are right, the program is dead and the work is to prove them wrong.

-

When you’re talking to potential “customers” for a program (see Chapter 5, Identifying who cares), try to figure out what subtle things would cause them to dismiss the program’s outputs (e.g., Does it need to fit into a briefcase? Will nobody use it if it’s above $100?). These are also key risks.

-

Make a list of all the risks and assumptions that your program’s success relies upon. Go through and identify which would kill the program if you’re wrong, which would make it significantly harder, slower, or more expensive, and which would be relatively minor complications.
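Even a plain list benefits from being sorted so that program-killing assumptions come first, since those are the ones to test first. A minimal sketch, with three severity levels mirroring the ones named above; the example assumptions and their severity ratings are hypothetical:

```python
from enum import IntEnum
from typing import List, NamedTuple


class Severity(IntEnum):
    # Lower values sort first: these are the risks to tackle earliest.
    KILLS_PROGRAM = 0  # if this assumption is wrong, the program is dead
    MAJOR = 1          # significantly harder, slower, or more expensive
    MINOR = 2          # a relatively minor complication


class Risk(NamedTuple):
    assumption: str
    severity: Severity


def triage(risks: List[Risk]) -> List[Risk]:
    """Order risks by severity, most severe first.

    sorted() is stable, so risks with equal severity keep the order you listed them in.
    """
    return sorted(risks, key=lambda risk: risk.severity)


if __name__ == "__main__":
    # Hypothetical assumptions for an illustrative program.
    risks = [
        Risk("Existing suppliers can deliver reagents at the needed purity", Severity.MINOR),
        Risk("The device can be built to fit in a briefcase", Severity.KILLS_PROGRAM),
        Risk("Unit cost can be kept under $100", Severity.MAJOR),
    ]
    for risk in triage(risks):
        print(f"{risk.severity.name:<14} {risk.assumption}")
```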

As an example of a good bottleneck analysis, see Adam Marblestone’s positional chemistry bottleneck analysis.

Techno-economic and Impact Analyses

“Techno-economic analysis” is a fancy term for quantitatively trying to answer the question: “Is it possible to eventually produce this technology at a price that people would be willing to pay for it?” I’m going to refer to it as TEA for the rest of this section but don’t assume that everyone will know what the acronym stands for, or even the term “techno-economic analysis” for that matter.

An “impact analysis” quantitatively tries to answer the question, “If you are wildly successful, what difference does it make?”

TEAs and impact analyses are not the same thing, but they are both quantitative ways of exploring what needs to be true for your program to be worthwhile (and whether it is worthwhile at all!). This section covers both because sometimes when people say they want one, they actually want the other, and both types of analysis require similar approaches and skills: figuring out benchmark metrics, unpacking systems, dimensional analysis, and Fermi estimates.

What is a Techno-economic analysis?

There is no “official” definition of a TEA that everybody will agree on. Here, I’m going to define a TEA as an analysis that answers four questions as quantitatively as possible:

-

What are the limits to the technology’s performance (assuming someone successfully built and scaled it)?

-

How much would that performance cost?

-

What performance do other current approaches have?

-

How much do those other current approaches cost?

Things that TEAs ignore:

-

How much the R&D and scaling efforts will cost.

-

The market dynamics and market size for the technology: techno-economic analyses are not business plans! “Market dynamics” here means things like which organizations are doing what, pricing, margins, etc.

Note that TEAs are application-specific. This fact will be annoying if you’re trying to create a general-purpose technology. However, it’s useful for forcing you to narrow your program’s focus to a specific domain, which you will almost inevitably need to do to sell it anyway. TEAs can also help you compare the impact the technology would have in different domains.

For example, a TEA for a new way of making steel might show that the resulting steel is 15% stronger than other production methods, but cost 5% more than common methods. That’s good to know! In order for the program to be a good idea, you need a good sense of whether the strength increase is worth the cost increase and for whom. Or whether there are other properties the new steel has that people would pay for.

(The program’s worthwhileness also depends on the impact of having 15% stronger steel.)
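The steel numbers can be turned into a first-pass comparison with a line of arithmetic. This is a deliberately crude sketch that normalizes the incumbent to 1.0 and assumes, purely for illustration, that a buyer values strength proportionally and that material use scales inversely with strength; whether either assumption holds, and for whom, is exactly the question raised in the steel example above. The function names are hypothetical.

```python
def performance_per_cost(rel_performance: float, rel_cost: float) -> float:
    """Performance per unit cost of a new method, relative to an incumbent normalized to 1.0."""
    if rel_cost <= 0:
        raise ValueError("relative cost must be positive")
    return rel_performance / rel_cost


def cost_for_equal_performance(rel_performance: float, rel_cost: float) -> float:
    """Relative cost to match the incumbent's performance.

    ASSUMES the material needed scales as 1 / performance (e.g. a strength-limited part).
    This is an illustrative assumption, not a general law.
    """
    if rel_performance <= 0:
        raise ValueError("relative performance must be positive")
    return rel_cost / rel_performance


if __name__ == "__main__":
    # From the example: 15% stronger, 5% more expensive than common methods.
    print(f"{performance_per_cost(1.15, 1.05):.3f}")        # 1.095
    print(f"{cost_for_equal_performance(1.15, 1.05):.3f}")  # 0.913
```

Under those assumptions the new steel delivers roughly 9.5% more strength per unit cost; for a buyer who pays for a fixed quantity of steel regardless of strength, the 5% premium is simply a cost.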

What is an impact analysis?

An impact analysis tries to put numbers on the questions:

-

If this program were wildly successful in achieving its technical goals, what difference would that make?

-

What needs to be true in order for that to happen?

For example, an impact analysis for a program to create a new kind of carbon capture technology might show the following: even if the program were wildly successful and the new technology were adopted as widely as possible, its impact would be limited because it requires a catalyst metal so rare that even if you used the world’s entire supply to make the technology, its capacity would only be able to remove 0.001% of the world’s yearly carbon emissions.
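The catalyst argument is a supply-limited upper bound, and it is worth writing both directions of it down: the maximum share of emissions a fixed supply can remove, and how productive each kilogram of catalyst would have to be to reach a target share. A minimal sketch follows; the function names and every input value are hypothetical placeholders, not figures behind the 0.001% result above.

```python
def max_removal_fraction(catalyst_supply_kg: float,
                         capture_t_per_year_per_kg: float,
                         emissions_t_per_year: float) -> float:
    """Upper bound on the share of yearly emissions removed if the world's
    entire catalyst supply went into the technology."""
    max_capture_t_per_year = catalyst_supply_kg * capture_t_per_year_per_kg
    return max_capture_t_per_year / emissions_t_per_year


def required_capture_per_kg(target_fraction: float,
                            catalyst_supply_kg: float,
                            emissions_t_per_year: float) -> float:
    """The same relation run backwards: tonnes of CO2 per year each kg of
    catalyst must capture for the fixed supply to reach a target share."""
    return target_fraction * emissions_t_per_year / catalyst_supply_kg


# HYPOTHETICAL placeholder inputs; replace them with sourced numbers
supply_kg = 50_000
capture_rate_t_per_year_per_kg = 20.0
emissions_t_per_year = 3.0e10

print(f"{max_removal_fraction(supply_kg, capture_rate_t_per_year_per_kg, emissions_t_per_year):.6%}")
print(required_capture_per_kg(0.01, supply_kg, emissions_t_per_year))
```

Running the relation backwards is what turns the result into a program priority: if the required capture per kilogram is far beyond anything plausible, the options are the ones listed next (substitute the catalyst or expand its supply).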

Now, that result doesn’t necessarily mean the program isn’t worth doing. What it does mean is that you need to either focus your program heavily on replacing the catalyst with something more common or on increasing the catalyst’s supply, or have a strong argument for why exogenous factors will increase that supply.

If you hadn’t done the analysis, either someone would eventually point out the catalyst problem and crater support for the program, or you would complete the program and have almost no impact!

Why you should do a TEA

You should do a back-of-the-envelope TEA both for yourself and for external stakeholders.

Reasons you should do it for yourself:

-

So you’re not fooling yourself and wasting your time pursuing a technology that will never actually be useful.

-

To force yourself to make explicit assumptions about what needs to happen to make the technology viable, what matters about the technology, and what you expect the world to look like when the technology is useful.

-

To identify the biggest risks and gaps between the state of the technology now and where it needs to be in order to be useful.

Reasons TEAs are important for external stakeholders:

-

TEAs create a legible scenario where the technology is useful and make clear which assumptions underlie that scenario.

-

The analysis shows that you understand where the bottlenecks are and what the biggest gaps you need to close are.

-

TEAs should make it clear that if your program is successful, it will make a meaningful difference to something important, and why it’s a better approach than the alternatives.

You could create a rocket that could get to Mars in a week, but if it cost a trillion dollars per flight, it would never actually exist in the real world. (Unless someone discovered something worth trillions of dollars on Mars, of course.) DARPA successfully created artificial blood, but it was so much more expensive than normal blood, with no prospect of getting cheaper, that it was ultimately never used. TEAs are meant to prevent these situations, or at least make sure you’re walking into them with eyes wide open.

When you should do TEAs and impact analyses

TEAs only make sense in the context of building technologies and creating explicitly “useful” things. If the goal of a program is to create fundamental knowledge about the universe, the concepts of performance and of costs beyond the scope of the program don’t make much sense.

You should try to do an impact analysis for any program. It forces you to have a precise theory of change, can uncover unintuitive things your program needs to prioritize to have impact, and makes a great sales pitch.

Obviously there are impacts that metrics cannot capture: how would you do an impact analysis on a program to discover what caused the Big Bang? However, these cases are rarer than you might think, and skipping an impact analysis is often an excuse for lazy thinking.

How to do a techno-economic analysis

You can do TEAs with arbitrary amounts of detail, but roughly they fall into two categories: back-of-the-envelope calculations and hardcore analyses. The steps are mostly the same for both, so first I’ll give a broad picture of what goes into both kinds of analysis, then walk through an example of a back-of-the-envelope analysis, and then flag what you can do to make it increasingly hardcore.

-

Write down the key metrics that matter in the domain (ie. the metrics that people who use it will care about). These might be a property of the technology itself, or a property of something that the technology is an input to or a component of.

-

Create a spreadsheet (everything further down goes into this spreadsheet). For all numbers, make sure to write down units, your source for that number, and assumptions that are going into it.

-

Write down/derive the inputs to making your technology. These inputs fall into two broad categories:

-

Capital costs: these are the factories, containers, and machines that someone needs to buy once to create the thing. They are fixed regardless of how much of the thing you do or make.

-

Operating costs: these are costs that grow proportionally to the amount of stuff you make — labor, materials, and energy.

-

Use these costs to make a reasonable estimate of how your technology could perform on the key metrics you identified.

-

Identify a comparable technology available today.

-

Estimate (or just look up) how the comparable technology performs on your key metrics and on costs.

-

Compare the performance and cost of the current technology and your proposed technology.
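The instruction to write down units, a source, and assumptions for every number is the step that most often gets skipped. As a minimal sketch (the `Input` record and `audit` helper are hypothetical names, not part of any tool), here is one way to keep those three fields next to each value and flag rows that are missing them; a spreadsheet with dedicated columns does the same job.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Input:
    """One spreadsheet row: a number plus what you need to audit it later."""
    name: str
    value: float
    unit: str
    source: str      # citation, link, or an honest "my guess"
    assumption: str  # what has to be true for the number to hold


def audit(rows: list) -> list:
    """Return a note for every row missing a unit, a source, or an assumption."""
    problems = []
    for row in rows:
        for field in ("unit", "source", "assumption"):
            if not getattr(row, field).strip():
                problems.append(f"{row.name}: missing {field}")
    return problems


sheet = [
    Input("factory capital cost", 7.6e9, "USD",
          "expected Commonwealth Fusion plant cost",
          "antimatter factory hardware costs about as much as a fusion plant"),
    # HYPOTHETICAL placeholder row; the source is left blank to show the audit firing
    Input("electricity price", 0.03, "USD/kWh", "", "all energy comes from cheap solar"),
]
print(audit(sheet))
```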

In the first step, ideally you can find a metric that lets you compare technologies regardless of how they achieve what people want, like $/kg to orbit or $/kWh; these are called “performance-equivalent functional units.” However, in some cases (like the antimatter propulsion example below) no single metric captures what matters, and it makes more sense to present a few performance metrics than to create one convoluted metric that tries to capture everything. One of those metrics should always involve cost, or it looks like you’re trying to pull something. That said, a higher cost isn’t automatically disqualifying: sometimes people are willing to pay more for more performance.
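To see why a performance-equivalent functional unit matters, here is a sketch comparing two hypothetical launch vehicles; all numbers are made up for illustration. Cost per launch favors the smaller vehicle, while $/kg to orbit, which is what the payload owner actually buys, reverses the ranking.

```python
def cost_per_kg_to_orbit(cost_per_launch_usd: float, payload_kg: float) -> float:
    """Functional unit for launch: dollars per kilogram delivered to orbit."""
    return cost_per_launch_usd / payload_kg


# HYPOTHETICAL vehicles: (cost per launch in USD, payload to orbit in kg)
vehicles = {
    "small vehicle": (10e6, 1_000),
    "large vehicle": (50e6, 20_000),
}

for name, (cost, payload) in vehicles.items():
    print(f"{name}: ${cost:,.0f}/launch, ${cost_per_kg_to_orbit(cost, payload):,.0f}/kg")
```

With these placeholder numbers, the large vehicle is 5x more expensive per launch but 4x cheaper per kilogram ($2,500/kg vs. $10,000/kg).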

Example of a back-of-the-envelope analysis

The goal of a back-of-the-envelope analysis is to be accurate to within an order of magnitude and to highlight how the inputs to a technology are related.

Let’s walk through an example of a back-of-the-envelope analysis. In this case, we’re going to assume we have an idea for a program to produce antimatter for space propulsion at scale. It’s in large part based on Casey Handmer’s work here (Casey is, incidentally, the master of this kind of analysis).

When we’re talking about big spacecraft, one thing many people care about is getting to Mars. The two things that matter most for that trip are how long it takes and how much it costs. There’s not a great way of combining these into a single metric,[2] so let’s keep them separate for now.

We’ll use SpaceX’s Starship as a benchmark technology. We’re going to use its numbers to get the mass and volume of a standard spacecraft that we want to send to Mars. We’re also going to look up how much it costs to fuel it ($240,000) and how long it would take to get to Mars (259 days).

We’re then going to look at antimatter propulsion. With some assumptions, we can figure out that an antimatter-powered Starship could achieve 32,000 m/s of delta-v and get us to Mars in 14 days. That’s pretty good!
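The section doesn’t show how the 32,000 m/s figure was derived. A common starting point for relating delta-v to propellant is the ideal (Tsiolkovsky) rocket equation, Δv = v_e ln(m0/mf). The sketch below is that textbook relation, not the calculation behind the numbers above, and the exhaust velocities in the loop are hypothetical inputs chosen only to show the trend.

```python
import math


def delta_v(exhaust_velocity_m_s: float, wet_mass_kg: float, dry_mass_kg: float) -> float:
    """Ideal rocket equation: delta-v = v_e * ln(m0 / mf)."""
    return exhaust_velocity_m_s * math.log(wet_mass_kg / dry_mass_kg)


def mass_ratio_needed(delta_v_m_s: float, exhaust_velocity_m_s: float) -> float:
    """Invert the rocket equation: the wet-to-dry mass ratio m0 / mf for a target delta-v."""
    return math.exp(delta_v_m_s / exhaust_velocity_m_s)


# HYPOTHETICAL exhaust velocities in m/s, for illustration only
for v_e in (3_500, 30_000, 1_000_000):
    ratio = mass_ratio_needed(32_000, v_e)
    print(f"v_e = {v_e:>9,} m/s -> mass ratio for 32,000 m/s: {ratio:,.2f}")
```

Because the required mass ratio grows exponentially as exhaust velocity falls, exhaust velocity is the lever that makes very high delta-v (and short trips) plausible at all.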

How much is that performance going to cost?

First, let’s think about the capital costs. Here it gets super hand-wavy because, as of this writing, I don’t know how we would produce antimatter at scale. Proposals for how to do it involve a lot of hardware vaguely similar to fusion reactors’ (superconducting magnets, vacuum systems, etc.), so let’s assume that an antimatter factory costs roughly as much as a Commonwealth Fusion plant is expected to cost: $7.6B. (Yes, this is assumptions on assumptions.) In real life, you should have a much better sense of at least the components of the capital needed to create a technology.

Now, let’s think about operating costs. We can start with the amount of antimatter we’re producing per year: let’s assume the factory can produce four flights’ worth of antimatter per year. In reality, you should have some justification for the capacity you can achieve at scale, but here I’m literally just making it up. Note that this is eight orders of magnitude more than the most antimatter that has ever been produced in a year.

Assuming we have an amazing process that is 50% efficient, we can calculate how much energy that production will take, and then how much that energy would cost if we made it all from cheap solar. As for other operating costs (labor, maintenance, etc.), I’m just going to totally hand-wave and assume they come to 0.01% of the total capital cost per year. This gives us a cost per year for both energy and other operating expenses.
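Both operating-cost terms are short formulas. The sketch below makes one assumption the text leaves open: that the 50% efficiency is measured against the rest-mass energy (m·c²) of the antimatter produced. The production rate and electricity price in the usage lines are hypothetical placeholders, since the text doesn’t give them; only the 0.01% share and the $7.6B capital figure come from the example.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0
JOULES_PER_KWH = 3.6e6


def annual_energy_cost_usd(antimatter_kg_per_year: float,
                           efficiency: float,
                           electricity_usd_per_kwh: float) -> float:
    """Electricity bill for a year of production.

    Assumption: efficiency is measured against the rest-mass energy (m * c^2)
    of the antimatter produced, so input energy = m * c^2 / efficiency.
    """
    energy_j = antimatter_kg_per_year * SPEED_OF_LIGHT_M_S ** 2 / efficiency
    return energy_j / JOULES_PER_KWH * electricity_usd_per_kwh


def annual_other_opex_usd(capital_cost_usd: float, fraction_of_capital: float = 0.0001) -> float:
    """Labor, maintenance, etc. as a flat share of capital per year (0.01% in the text)."""
    return capital_cost_usd * fraction_of_capital


# HYPOTHETICAL production rate and solar price; the text doesn't state them
energy = annual_energy_cost_usd(antimatter_kg_per_year=0.001, efficiency=0.5,
                                electricity_usd_per_kwh=0.03)
other = annual_other_opex_usd(7.6e9)
print(f"energy: ${energy:,.0f}/yr, other opex: ${other:,.0f}/yr")
```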

We can find a $/trip number by combining the operating costs and the capital costs. To turn the capital costs into a $/year number, assume that all the capital equipment will last 15 years and divide the cost by 15. This is called the “amortized cost.” (Just dividing capital costs by how long you expect the equipment to last is a very naive way of thinking about capital costs; a hardcore analysis will use a more sophisticated financial model, but the naive approach is fine for a back-of-the-envelope calculation.) Adding the yearly operating costs to the amortized capital costs gives the cost of running the factory for a year, and a quarter of that is the $/trip, since we assumed the factory produces four flights’ worth of antimatter per year.
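Here is the amortization step as a sketch. With `discount_rate=0` it reproduces the naive “divide by lifetime” approach described above; a positive rate swaps in the standard capital recovery (annuity) factor, one common example of a more sophisticated financial model. The 8% rate is a hypothetical value, and energy cost is left out of the usage lines because the text doesn’t give its inputs (add it to `annual_operating_cost_usd` if you have it).

```python
def capital_recovery_factor(discount_rate: float, lifetime_years: float) -> float:
    """Share of the up-front capital cost to charge against each year of operation.

    discount_rate == 0 gives the naive 1 / lifetime used in the text; a positive
    rate gives the standard annuity (capital recovery) factor
    r * (1 + r)**n / ((1 + r)**n - 1), which also charges for the cost of money.
    """
    if discount_rate == 0:
        return 1.0 / lifetime_years
    growth = (1.0 + discount_rate) ** lifetime_years
    return discount_rate * growth / (growth - 1.0)


def cost_per_trip_usd(capital_cost_usd: float,
                      lifetime_years: float,
                      annual_operating_cost_usd: float,
                      trips_per_year: float,
                      discount_rate: float = 0.0) -> float:
    """Yearly cost of running the factory (annualized capital + operating) split across trips."""
    annualized_capital = capital_cost_usd * capital_recovery_factor(discount_rate, lifetime_years)
    return (annualized_capital + annual_operating_cost_usd) / trips_per_year


capital = 7.6e9
other_opex = capital * 0.0001  # the text's 0.01%-of-capital-per-year hand-wave
naive = cost_per_trip_usd(capital, 15, other_opex, trips_per_year=4)
# HYPOTHETICAL 8% cost of capital, for comparison only
with_interest = cost_per_trip_usd(capital, 15, other_opex, trips_per_year=4, discount_rate=0.08)
print(f"naive amortization: ${naive:,.0f}/trip")
print(f"8% cost of capital: ${with_interest:,.0f}/trip")
```

With the example’s numbers and energy left out, amortized capital plus the 0.01% term already comes to about $126.9 million per trip, so nearly all of the $127,000,000 figure is capital cost. Charging a hypothetical 8% for money raises it to about $222 million per trip.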

That calculation puts the fuel cost of an antimatter-powered flight at $127,000,000, compared to $240,000 for a conventionally fueled flight. That’s a lot more expensive!

So to reiterate our final metrics:

-

A ‘benchmark’ conventionally powered Starship flight to Mars takes 259 days and costs $240,000 in fuel.

-

An antimatter-powered flight to Mars takes 14 days and costs $127,000,000 in fuel.
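These ratios don’t combine time and cost into a single figure of merit; they just make the size of the tradeoff concrete, which helps when asking who would want the speed enough to pay for it. A minimal sketch using the two bullet points’ numbers (`compare_trips` is a hypothetical helper, and “extra fuel cost per day saved” is one illustrative framing, not a metric from the analysis):

```python
def compare_trips(bench_cost_usd: float, bench_days: float,
                  new_cost_usd: float, new_days: float) -> tuple:
    """Return (cost multiple, speedup, extra fuel cost per day of travel saved).

    Assumes the new trip is strictly faster (bench_days > new_days).
    """
    cost_multiple = new_cost_usd / bench_cost_usd
    speedup = bench_days / new_days
    extra_usd_per_day_saved = (new_cost_usd - bench_cost_usd) / (bench_days - new_days)
    return cost_multiple, speedup, extra_usd_per_day_saved


multiple, speedup, per_day = compare_trips(240_000, 259, 127_000_000, 14)
print(f"{multiple:.0f}x the fuel cost for a {speedup:.1f}x faster trip")
print(f"about ${per_day:,.0f} of extra fuel per day of travel saved")
```

That works out to roughly 529x the fuel cost for an 18.5x faster trip, or about $517,000 of extra fuel per day saved.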

Note that doing this whole analysis took about an hour and a half, most of which was spent looking up numbers. Back-of-the-envelope calculations should be fast.

This example illustrates some important things that will come up frequently when doing techno-economic analyses:

-

The analysis suggests that the new technology (even once it works) will be drastically more expensive than the alternative, even if it performs much better. For working on the technology to be a good idea, you need either a good explanation of who wants the improved performance enough to pay the price, or a clear idea of what the major cost drivers are and a pathway for them to become cheap enough for the new technology to be cost competitive. (See tornado charts in the hardcore section for more about that.)

-

You will need to make a ton of assumptions — that’s fine, as long as you make it clear where you made them.

-

Pay attention to units! Often if you can’t just look up a quantity, you can figure out how to calculate it by looking at the units.
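One way to make “look at the units” mechanical is to carry units along with every number so that mismatches and cancellations are visible. The hypothetical `Quantity` class below is a toy sketch of that idea, supporting only multiplication and division; for real work, a units library such as pint does this far more thoroughly. The price and energy numbers are placeholders.

```python
from collections import Counter


class Quantity:
    """A number that carries its units as {unit: exponent}, e.g. {"USD": 1, "kWh": -1}."""

    def __init__(self, value: float, units: dict):
        self.value = value
        self.units = {u: p for u, p in units.items() if p != 0}  # drop cancelled units

    def _merged_units(self, other: "Quantity", sign: int) -> dict:
        merged = Counter(self.units)
        for unit, power in other.units.items():
            merged[unit] += sign * power
        return dict(merged)

    def __mul__(self, other: "Quantity") -> "Quantity":
        return Quantity(self.value * other.value, self._merged_units(other, +1))

    def __truediv__(self, other: "Quantity") -> "Quantity":
        return Quantity(self.value / other.value, self._merged_units(other, -1))

    def __repr__(self) -> str:
        units = " ".join(u if p == 1 else f"{u}^{p}" for u, p in sorted(self.units.items()))
        return f"{self.value:g} {units}"


# HYPOTHETICAL numbers: an electricity price and the energy needed per kg of product
price = Quantity(0.05, {"USD": 1, "kWh": -1})
energy_per_kg = Quantity(20.0, {"kWh": 1, "kg": -1})
print(price * energy_per_kg)  # kWh cancels, leaving USD per kg
```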

Making the analysis more hardcore

To a large extent, making an analysis more hardcore involves breaking systems and assumptions into their constituent components. Some specific things you can do include:

-

Capital costs

-

Break out the capital costs into different components

-

Instead of treating capital costs as a lump sum for an asset that lasts forever, treat them as a regular payment over the finite lifetime of a factory or piece of equipment.

-

Instead of assuming that you pay off the same amount of capital costs every year, you can use a more sophisticated financial model.

-

Operating costs

-

Break the technology out into its constituent parts; this is known as a “bill of materials.”

-

Do sensitivity analyses — these are analyses that show how much cost and performance can change based on different assumptions.

-

Create tornado charts: horizontal bar charts that illustrate how sensitive performance and cost are to different parameters.

-

Do Monte Carlo simulations that randomly vary different parameters in order to capture the range and distribution of potential outcomes.

-

An example of a tornado chart.

(At some point in the future, I’ll expand this section to do a walkthrough of a hardcore TEA analysis but in the meantime, here is an example of a pretty thorough analysis.)

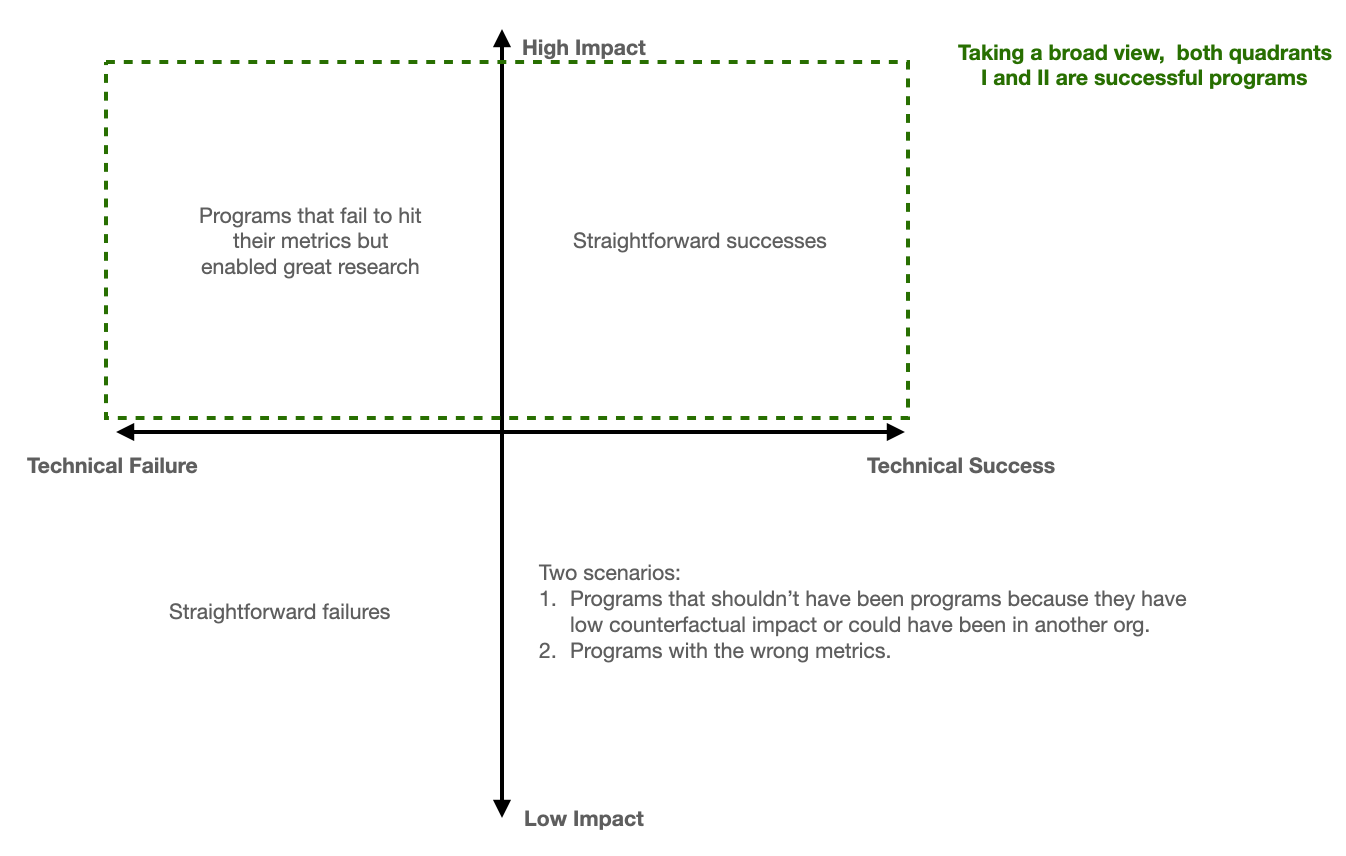
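As a starting point for the sensitivity-analysis items in the list above, here is a sketch built on the naive $/trip model from the back-of-the-envelope example (energy omitted). `tornado_rows` does one-at-a-time sensitivity, which produces the data behind a tornado chart, and `monte_carlo` varies all parameters together. The low/high ranges are hypothetical placeholders, and sampling uniformly within them is a simplification; `random.triangular` is one standard-library alternative when you have a most-likely value.

```python
import random


def cost_per_trip(p: dict) -> float:
    """Naive model from the example: (capital / lifetime + opex) / trips per year."""
    annual = p["capital_usd"] / p["lifetime_years"] + p["annual_opex_usd"]
    return annual / p["trips_per_year"]


BASE = {"capital_usd": 7.6e9, "lifetime_years": 15,
        "annual_opex_usd": 7.6e5, "trips_per_year": 4}
# HYPOTHETICAL (low, high) ranges, for illustration only
RANGES = {"capital_usd": (3.8e9, 15.2e9), "lifetime_years": (10, 30),
          "annual_opex_usd": (7.6e5, 7.6e7), "trips_per_year": (2, 40)}


def tornado_rows(model, base, ranges):
    """One-at-a-time sensitivity: set each parameter to its low and high value while
    holding the others at base. Returns (name, output at low, output at high, swing)
    sorted widest swing first, the order the bars are drawn in."""
    rows = []
    for name, (low, high) in ranges.items():
        out_low = model({**base, name: low})
        out_high = model({**base, name: high})
        rows.append((name, out_low, out_high, abs(out_high - out_low)))
    return sorted(rows, key=lambda row: row[3], reverse=True)


def monte_carlo(model, base, ranges, n=10_000, seed=0):
    """Sample every parameter uniformly from its range at once; return sorted outcomes."""
    rng = random.Random(seed)
    outcomes = []
    for _ in range(n):
        sample = {name: rng.uniform(low, high) for name, (low, high) in ranges.items()}
        outcomes.append(model({**base, **sample}))
    return sorted(outcomes)


for name, out_low, out_high, _ in tornado_rows(cost_per_trip, BASE, RANGES):
    print(f"{name:>16}: ${out_low / 1e6:,.0f}M to ${out_high / 1e6:,.0f}M per trip")

outcomes = monte_carlo(cost_per_trip, BASE, RANGES)
p10, p50, p90 = (outcomes[int(q * (len(outcomes) - 1))] for q in (0.1, 0.5, 0.9))
print(f"P10 ${p10 / 1e6:,.0f}M, median ${p50 / 1e6:,.0f}M, P90 ${p90 / 1e6:,.0f}M")
```

The tornado rows show which single assumption moves the answer most, but they miss interactions between parameters; the Monte Carlo run captures those and gives a spread of outcomes rather than one number. Neither is more trustworthy than the ranges you feed it.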

Conclusion