Whence Systems Research?

What the heck is systems research? Why is it important? Why are we bad at it? How could we do better?

- What is systems research?

- Systems research inherits attributes from both systems and research (surprise!)

- Systems research is necessary when a system is not modular.

- Systems research requires a lot of work that is expensive and uninteresting.

- People consistently underrate how hard it is to integrate components into a system.

- Systems research in a given domain gets harder as that domain matures.

- Systems Research is Important

- Current institutions do not support systems research

- How do we do better systems research?

- Thanks

I’ve struggled to articulate the flavor of research that PARPA does, and why it’s different from the profusion of research that we do see happening. I’ve hand-waved a bit at things that are “too researchy to be a startup and too engineering-heavy to be in academia” but this is not quite right — if things go to plan, some of the work we do will be collaborations with both startups and academia. Instead, the right phrase for what we’re doing is “systems research.”

It’s one of those terms that was once so common that people didn’t need to define it, but has fallen so far out of use that it no longer means anything to most people. I want to unpack what systems research is and how it differs from other research, why it’s important, how current institutions fall short on the systems research front, and some hunches around how Speculative Technologies plans to enable more of it.

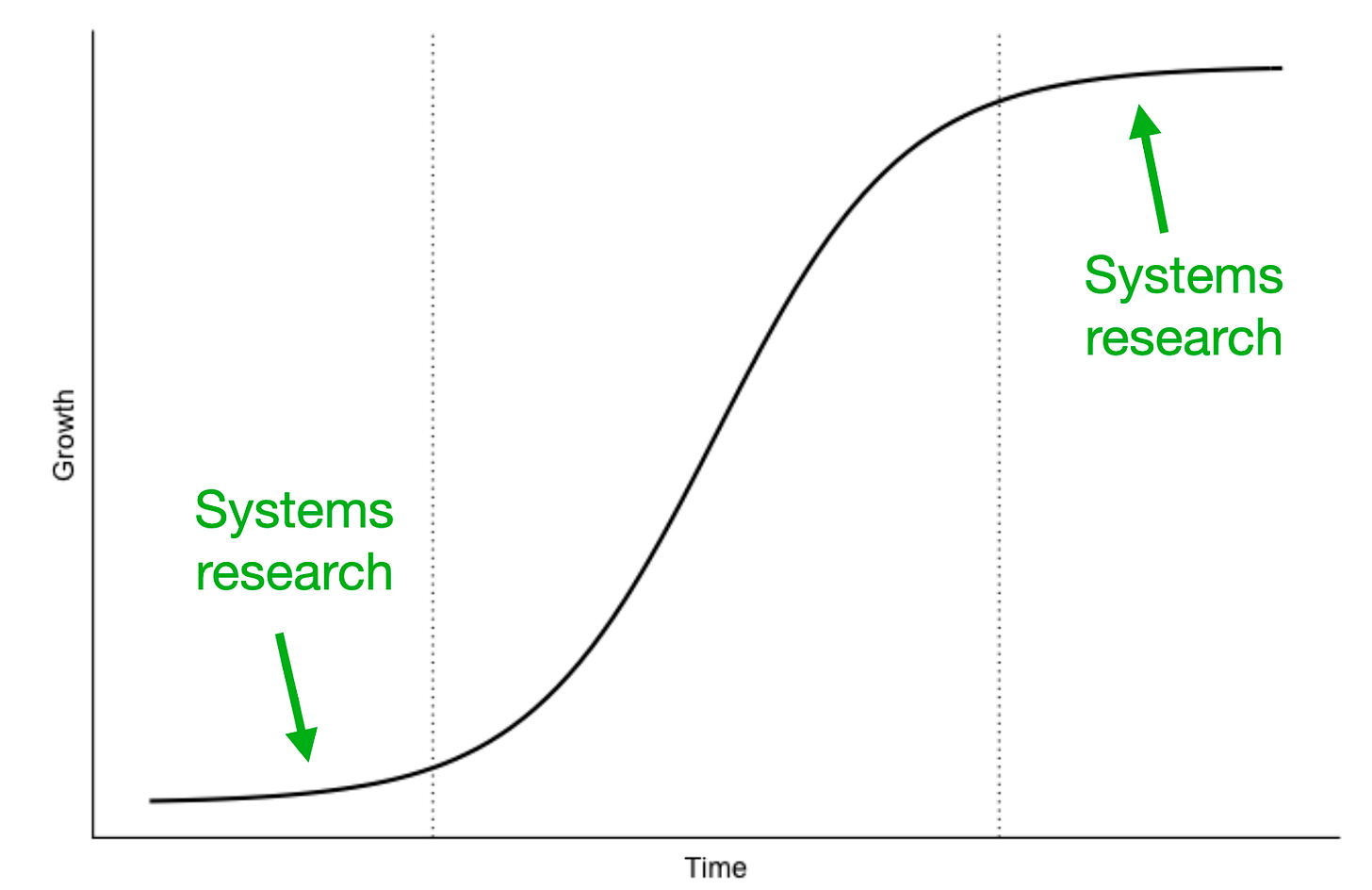

The use of the term systems research roughly reflects how much of it gets done

What is systems research?

Any attempt to rigorously define either “systems” or “research” ends as a hand-wavy mess, so, as you might expect, “systems research” is equally hard to pin down. Instead of trying to define systems research, the important things to focus on are the attributes of systems research that distinguish it from other research and make it especially tricky.

Systems research inherits attributes from both systems and research (surprise!)

Systems research inherits a core attribute from systems: the fact that we’re talking about a technology with several discrete components whose actions depend on the output of other components. A single molecule drug is not a system, but the factory that makes it is. The core attribute that systems research inherits from research is unpredictable outcomes — if you know that what you’re doing will work, how it will work, and what you need to do to get there, it’s not research.

Systems research is necessary when a system is not modular.

Modularization allows different groups to work on different pieces of a system without coordinating. When you have good interfaces, changes in one component don’t require adjusting any other component. A power company can change from coal to nuclear power generation without telling lightbulb producers anything, as long as they both stick to local standards for voltage and frequency.

However, many systems aren’t modular. They’re a disgusting mess of interconnected dependencies. Poor modularity and leaky interfaces mean that in order to improve the entire system, changes in one component require adjusting all the other components. Propagating these changes doesn’t improve the other components and is often painstaking or boring: for those of you with software experience, imagine needing to go through and change the output type of every function in an entire library.

Systems research requires a lot of work that is expensive and uninteresting.

If changing one component is a clear win for the system, it’s straightforward to justify the work of propagating that change to the rest of the system. But when you’re doing research, you often don’t actually know what the outcome of the change is going to be until you propagate it. As a result, you end up needing to propagate many changes, many of which don’t actually work out. This experimental system-adjusting is the core of systems research.

When you’re trying to change a large system, a big chunk of the work doesn’t involve the actual interesting bits that you’re changing. Instead, you need to spend a lot of effort propagating changes through the entire system. Imagine an event that requires editing thousands of Wikipedia pages to reflect it or, in software, a change that requires you to mindfully touch a majority of the files in a large repo.

Switching a car from an internal combustion engine to an electric motor requires not just swapping out the obvious things (replacing the gas tank with a battery) but re-architecting the entire car — what do you do with the space where the engine used to be? How do you deal with the very different center of mass? How do you do steering when motors are located at the wheels? How do you refuel? Figuring out all of these answers requires work.

In this excellent presentation from Rob Pike about the decline of software systems research, he says:

Estimate that 90-95% of the work in Plan 9 [a unix successor being developed at Bell Labs] was directly or indirectly to honor externally imposed standards.

That is, to make a system functional after a change, you need to do a lot of work. A lot of the expensive uninteresting work doesn’t directly improve the system and may in fact be completely “wasted.” This waste runs headlong into cultures that prioritize justification and efficiency. The inherent inefficiency of systems research is another reason why I believe efficiency is overrated.

Propagating changes through a system is why systems research takes a surprising amount of time. Point changes take an amount of time that’s either independent of the number of components in a system or, at worst, proportional to it. Systems-level changes take an amount of time that grows as a power of the number of components: in the worst case you need to pay attention to every pairwise interaction between components, and the number of pairs grows roughly with the square of the number of components.
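To make the scaling concrete, here is a minimal sketch that compares the two worst cases described above. The function names are hypothetical and the "one check per component" and "one check per pair" cost models are simplifying assumptions, not measurements of any real system.

```python
from math import comb


def point_change_checks(n_components: int) -> int:
    """Assumed worst case for a point change: look at each component once."""
    return n_components


def pairwise_interactions(n_components: int) -> int:
    """Assumed worst case for a systems-level change: every pair of components.

    This is n * (n - 1) / 2, which grows with the square of n.
    """
    return comb(n_components, 2)


if __name__ == "__main__":
    for n in (5, 10, 50, 100):
        # Columns: component count, point-change checks, pairwise checks
        print(n, point_change_checks(n), pairwise_interactions(n))
```

Under these assumptions, going from 10 to 100 components multiplies the point-change work by 10 but the pairwise work by 110 (45 pairs versus 4,950).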

The expensive, boring work in systems research is more justifiable when the system has a clear metric. SpaceX and Bell Labs are/were able to justify impressive systems research in part because of clear systemic metrics ($/kg to orbit for SpaceX; number of calls, people, and connected locations for the Bell System). However, one key point of disruption theory is that new systems often win on completely different metrics than the ones they replace. (Incidentally, this metric shifting is also core to Kuhnian paradigm shifts in science.) As a result, it’s often hard to leverage metrics to justify systems research when you’re building new systems.

In the absence of a metric, a serious context of use is important for systems research in large part because it acts as a forcing function for the expensive uninteresting work. A context that someone cares about casts into a harsh light the parts of the system that need to be updated based on changes elsewhere — “you made a change over here that broke this other thing I really care about.” Without a context that someone cares about, it’s easy to ignore how the change of interest affects other parts of the system.

People consistently underrate how hard it is to integrate components into a system.

Hofstadter’s Law is the self-referential rule of thumb that “it always takes longer than you expect, even when you take into account Hofstadter’s Law.” If a system is involved, integration is a major culprit in actualizing the law.

If you’ve ever done software development, you know that even when all the components of a system nominally “work” in isolation, even when there are good interfaces and standards that everybody has followed, the chance that when you hook everything together for the first time the system just works is basically zero. Same thing with people — even if everybody nominally agrees on what needs to happen, somebody needs to spend a lot of time on coordination. And yet, people consistently fall into the trap of thinking “well, the parts all exist, it’s just a matter of hooking them together.”

The integration difficulties are exacerbated in physical systems, where you can’t just edit some code or have a conversation to get two components to work together. It’s even worse when the system is unlike anything people have done before and involves unintuitive interfaces. [Note: Although trains and planes required systems research, I would argue that automobiles didn’t.]

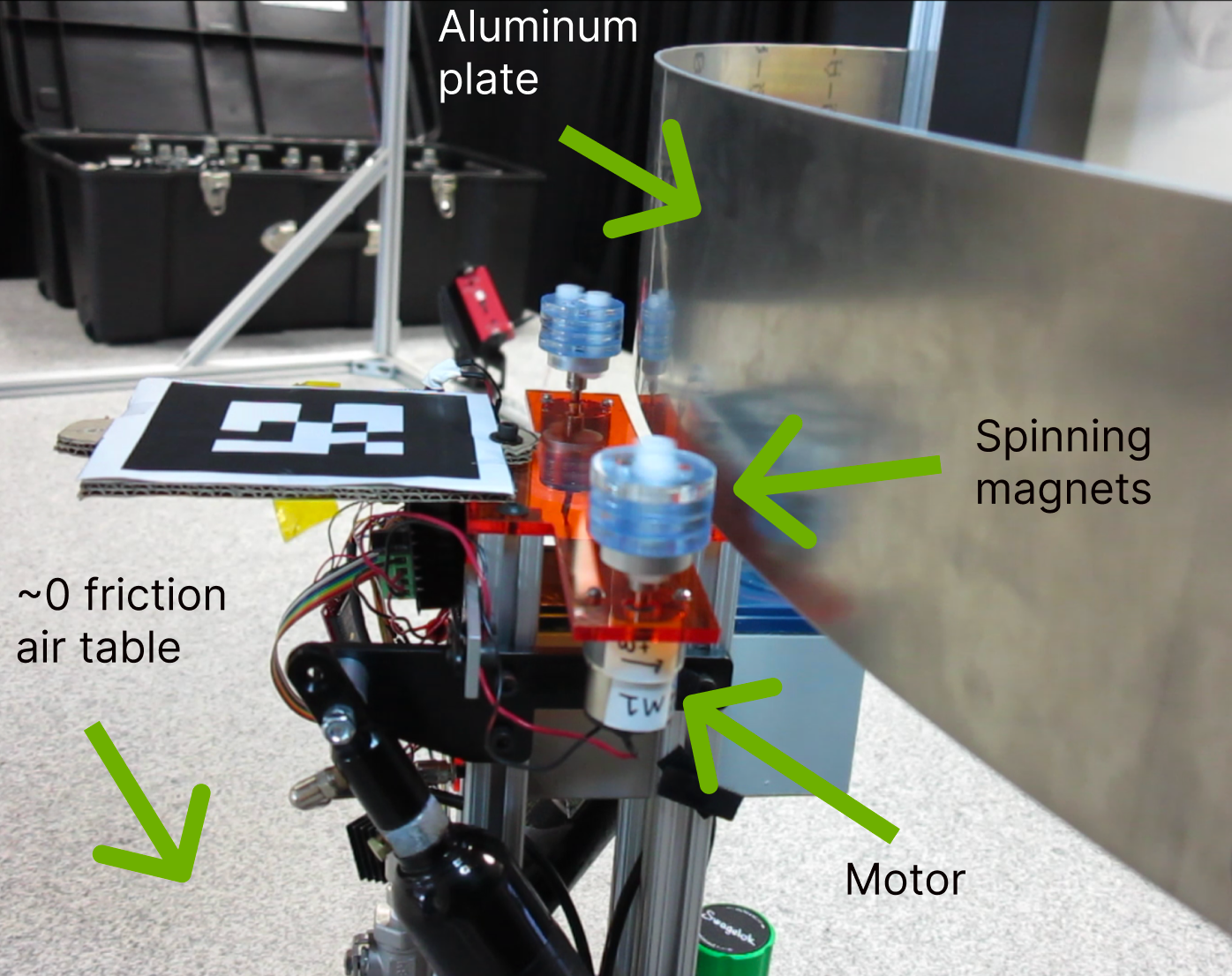

To illustrate some of the schedule-inflating phenomena that pop up during systems integration, I’ll reference a derpy spinning-magnet propelled space-robot prototype I built in grad school.

- Interfaces are rarely perfect. When I first plugged the wire connectors together and issued the spin command to the motors … nothing happened. It turns out that one of the wires had become dislodged in its housing preventing the circuit from completing.

- Interfaces are almost always leaky. The first time I turned the motors on (after fixing the wire problem), the robot almost ripped itself apart. It turns out that the motors were powerful enough that if their voltage went from 0 to the full 12V in one time step, the acceleration was violent enough to damage the rest of the robot. The fix was to go back into software and change “on” to be a gradual voltage ramp. The interface between the software and the motors was leaky because the properties of the motors reached back and affected how the software needed to work.

- Interfaces are rarely correct on the first go. I realized that I needed to not only be able to send voltage commands to the motor at each time step, but I also needed to monitor actual current consumption, which required adding additional wires and resistors.

- Systems often exhibit emergent behavior. When both motors were turned on at a certain speed, they resonated the plastic piece connecting them, causing them to wobble up and down and side to side. This emergent behavior only happened when the entire system was assembled. It’s possible to predict emergent behavior through models and simulations, but those take time and the more complex the system, the higher the chances that simulations won’t capture all of the emergent behavior.
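The leaky-interface fix in the list above (turning “on” into a gradual voltage ramp) can be sketched roughly as follows. This is an illustration under stated assumptions, not the code from the actual robot: the function names, the 3 V-per-step limit, and the idea of a fixed per-step limit are all hypothetical. Only the 0 V to 12 V range comes from the text.

```python
def ramp_voltage(current_v: float, target_v: float, max_step_v: float) -> float:
    """Return the next commanded voltage, moving toward target_v
    by at most max_step_v in a single time step."""
    if max_step_v <= 0:
        raise ValueError("max_step_v must be positive")
    delta = target_v - current_v
    if abs(delta) <= max_step_v:
        return target_v  # close enough to land exactly on the target
    return current_v + max_step_v if delta > 0 else current_v - max_step_v


def ramp_profile(target_v: float, max_step_v: float, start_v: float = 0.0) -> list:
    """List the voltage commanded at each time step until target_v is reached."""
    profile = []
    v = start_v
    while v != target_v:
        v = ramp_voltage(v, target_v, max_step_v)
        profile.append(v)
    return profile


if __name__ == "__main__":
    # Instead of jumping from 0 V to 12 V in one step, spread it over four.
    print(ramp_profile(12.0, 3.0))
```

The point of the sketch is the leak itself: the step limit is a property of the motors and the robot’s structure, yet it has to live in the software.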

All these issues were easily fixed, but they add up: together they added weeks to a timeline that was only a few months long. Many of them were embarrassingly rookie mistakes, but interface issues plague even the professionals. The crash of the $125m Mars Climate Orbiter because of a mismatch between metric and US customary units across an interface comes to mind. Fixing interface problems, or carefully checking everything so they never arise, takes time.
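One common way to guard against a unit mismatch across an interface is to make the unit part of the value that crosses it. The sketch below is a hypothetical illustration, not a description of any real flight software: the `Impulse` type and `apply_thruster_impulse` function are invented for this example, and the only outside fact it relies on is the standard conversion of 1 pound-force to about 4.448 newtons.

```python
from dataclasses import dataclass

# Newton-seconds per pound-force-second (1 lbf = 4.4482216152605 N).
LBF_S_TO_N_S = 4.4482216152605


@dataclass(frozen=True)
class Impulse:
    """An impulse stored internally in newton-seconds (hypothetical type)."""
    newton_seconds: float

    @classmethod
    def from_newton_seconds(cls, value: float) -> "Impulse":
        return cls(value)

    @classmethod
    def from_pound_force_seconds(cls, value: float) -> "Impulse":
        return cls(value * LBF_S_TO_N_S)


def apply_thruster_impulse(impulse: Impulse) -> float:
    """Hypothetical interface: accept only values that carry their units."""
    if not isinstance(impulse, Impulse):
        raise TypeError("expected an Impulse, got a bare number with unknown units")
    return impulse.newton_seconds


if __name__ == "__main__":
    # The producer states its units explicitly; the consumer always sees N*s.
    print(apply_thruster_impulse(Impulse.from_pound_force_seconds(1.0)))
```

This kind of check costs time to write and catches nothing on most days, which is exactly the flavor of expensive, uninteresting work described above.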

At a recent workshop we ran, a group of experienced technologists were talking about timelines for building a system for building microelectronics entirely different from our current paradigm (the details of which I will leave vague until we publish the technology roadmap). Among the people who think it’s possible at all, the level of optimism about timelines was shocking, and it rested largely on the fact that many of the components basically exist!

Systems research in a given domain gets harder as that domain matures.

Omar Rizwan points out:

‘Industrial systems’ (Unix, Linux, the PC, the Web, etc) accrete advantages, create user expectations of functionality, build up more complicated interfaces. It’s hard to make a Web browser now! You have to support a lot of stuff that’s out there in the world. It was hard to make a new PC operating system in 2000 and even harder in 2010, there was so much hardware out there to support. (hard = requires [a] long timeframe, capital-intensive, probably other things too). A lot easier to do that work (of creating a de novo system) when the domain is very early-stage.

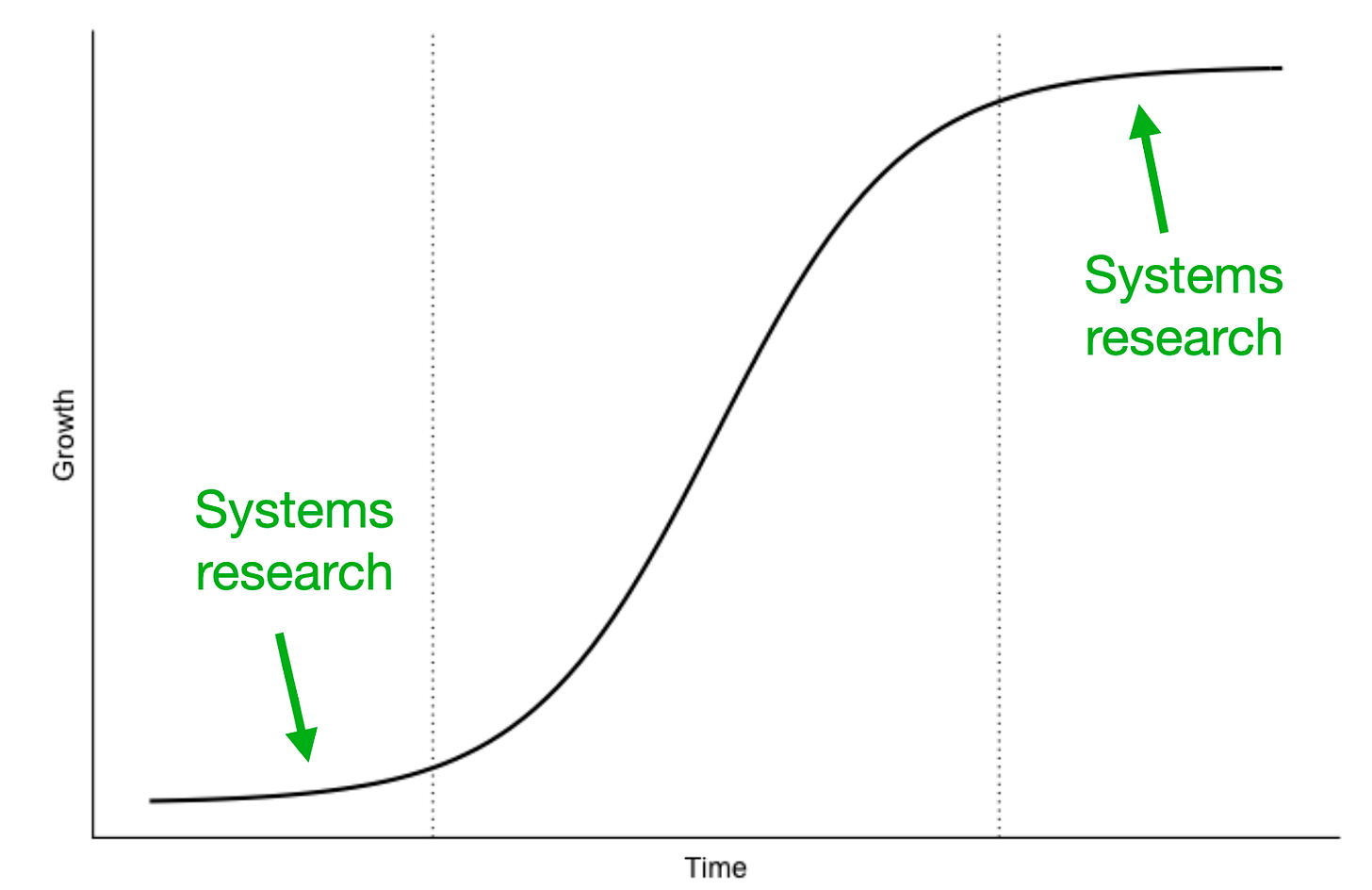

This effect is at least one of the causes behind the well known technological “S-curve,” where performance increases plateau after a period of rapid growth.

On the note of S-curves, my (extremely speculative) hunch is that getting a system to a modular-enough state where component work can make significant improvements contributes to the shift from low growth rates to high growth rates.

Systems Research is Important

In large part, the best argument for the importance of systems research is simply pointing to the past. Here are some things that required simultaneously inventing several interdependent components before the system would work at all (i.e., systems research):

- The Unix Operating System

- The Internet

- Planes

- Trains

- Bessemer steel

- Nuclear Power

… the list goes on.

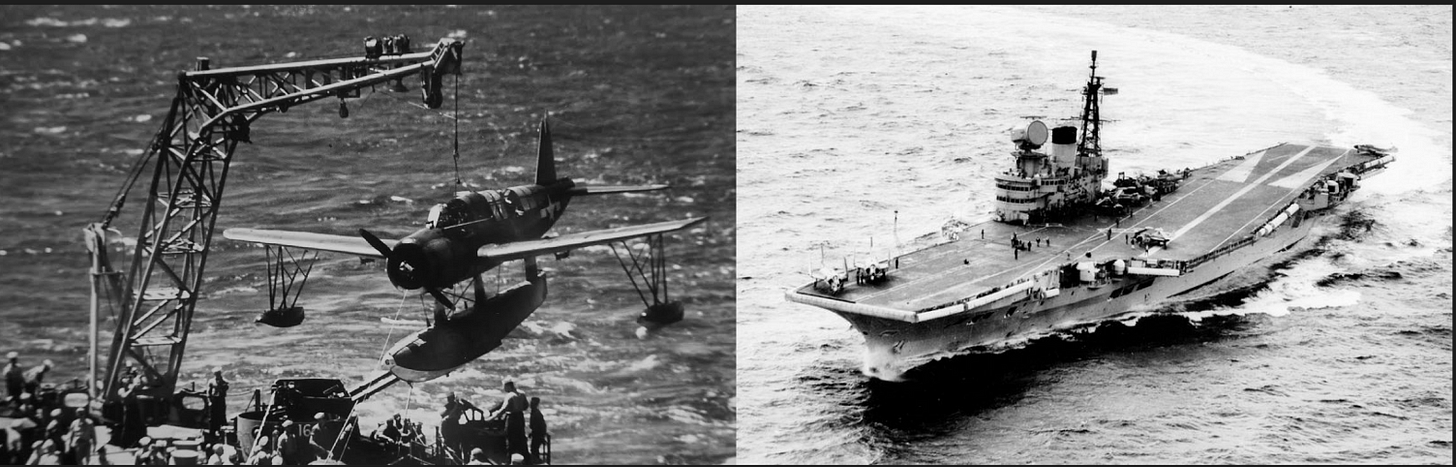

A more abstract argument for systems research’s importance goes something like this: new technology is often much more useful when it generates a new system instead of being an additional layer on top of an existing system or a drop-in replacement for an older technology. Electric motors became far more effective when factories were refactored to have many workstations using small electric motors instead of the original use of one giant electric motor as a drop-in replacement for a steam turbine. Military aircraft only reshaped naval warfare when we created entire ships designed to support them instead of strapping pontoon planes onto battleships.

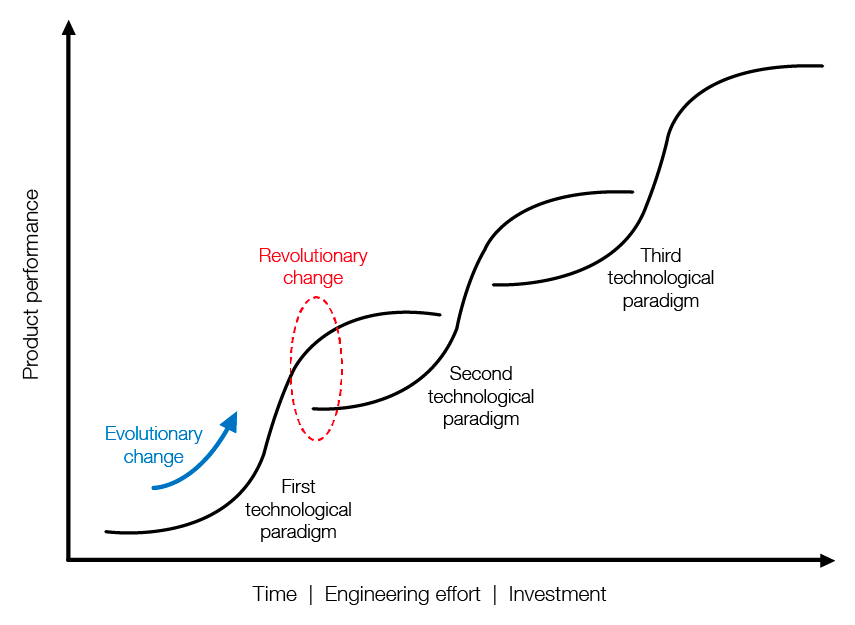

Systems research is how we avoid stagnation. Improving a system becomes harder as it matures and often the way to push our capabilities is to jump to an entirely new system that still has a lot of room for improvement. This move creates both disruption theory and the pattern of overlapping S-curves that pervades writing about innovation.

From Juan Cols via Jason Crawford

You don’t find these new S-curves deep in the jungle; many technologies need systems research to even get to the bottom of the curve.

Of course, it’s great when you don’t need to do systems research to exploit a new technology: LEDs enable more efficient, longer lasting, and programmable lighting without reinventing and replacing the socket system invented by Edison in 1889.

However, Venkatesh Narayanamurti and Jeffrey Tsao point out in The Genesis of Technoscientific Revolutions:

Solid State Lighting (SSL) is a compact technology compatible with much smaller sockets, but the first wave of SSL are virtually all backward-compatible “retrofits” with the larger Edison socket. Only when a new socket standard evolves will SSL be free to unleash its full capabilities.

It suggests a maximalist position that all locked-in interfaces are a crutch. We could unlock a ton of progress if we could make systems research and the subsequent retrofits massively easier and cheaper. I don’t think the maximalist position is correct, but it makes you think.

Current institutions do not support systems research

The repeated experimental change-propagation that characterizes systems research doesn’t just require a lot of work; a lot of that work isn’t publishable or lucrative and it’s especially hard to justify. As a result, most of the institutions that enable research struggle with systems research.

Academia: Propagating changes through a system is not novel

While changing a single component can be novel, updating the rest of the system to account for that change is usually academically uninteresting. Propagating changes through an entire system drives down the ratio between publishable work and total work. As a result, you see a lot of academic “component demos” that show how a tweak to one piece of a system or another could potentially have an effect. This in turn leads to a lot of research feeling like it’s creating lego pieces without any idea of how they’ll be used.

Theoretically, another organization that does systems research could pick up those lego pieces and integrate them into a system, but that rarely happens. Other institutions have their own incentives against systems research.

Philip Guo’s PhD Memoir illustrates both the grindy work required by systems research and the academic frustration with it:

I spent the first four months of my Ph.D. career painstakingly getting Klee [a research-grade bug finding tool] to analyze the code of thousands of Linux device drivers in an attempt to find new bugs. Although my task was conceptually straightforward, I was overwhelmed by the sheer amount of grimy details involved in getting Klee to work on device driver code. I would often spend hours setting up the delicate experimental conditions required for Klee to analyze a particular device driver only to watch helplessly as Klee crashed and failed due to bugs in its own code. When I reported bugs in Klee to Cristi, he would try his best to address them, but the sheer complexity of Klee made it hard to diagnose and fix its multitude of bugs. I’m not trying to pick on Klee specifically: Any piece of prototype software developed for research purposes will have lots of unforeseen bugs. My job was to use Klee to find bugs in Linux device driver code, but ironically, all I ended up doing in the first month was finding bugs in Klee itself. (Too bad Klee couldn’t automatically find bugs in its own code!) As the days passed, I grew more and more frustrated doing what I felt was pure manual labor— just trying to get Klee to work—without any intellectual content.

Companies: Systems research is inefficient

Companies do well when a problem or potential improvement can be framed in terms of a bottleneck. People love when there’s “one weird trick”: it prioritizes work, focuses the organization, and creates a compelling narrative for investors. Systems research is the opposite of this: “there’s a bunch of stuff we need to mess around with and it’s not clear which work will matter and which won’t, but the only way to find out is by doing it.” Put differently, systems research is inefficient.

If a company (either a startup or a large organization) has the choice between two kinds of ideas:

- An idea where you can know going into it that you’ll profit if you succeed at one specific thing.

- An idea where you need to do a good chunk of work to get to a point where the idea of bottlenecks even makes sense.

From a return on investment standpoint, the first kind of idea makes much more sense. Systems research can pay off, but the timescale combined with the deep uncertainty makes it unpalatable for most investors and executives.

To make matters worse, systems often need to take performance hits to get out of local optima. Early transistors were worse than contemporary vacuum tubes; early cars were worse than horse-drawn carriages; etc. So in order to support systems research, not only do companies need to sit with uncertainty for a long time, they need to do that while staring at metrics that look terrible compared to what they’re already doing.

Government: systems research is hard to justify

Governments will begrudgingly support systems research if there’s a clear widget that a specific government agency wants, like a stealth fighter or a Saturn V rocket, and it’s clear that systems research is the only way to get there. Even then it often requires some heroic individual effort to drive the project to completion.

But most systems research has the flavor of “we need to do this useless thing because we think that it might address the issues with this other useless thing which would then get a system working that is mostly worse than what we already have, but might get better than it one day.” In other words it’s hard to justify systems research without several leaps of faith.

The hard-to-justify nature of systems research runs headlong into the nominal imperative of (at least Western, democratic) governments to have chains of accountability. Even if a program officer buys into the system’s potential, they need to be able to justify it to a director who needs to be able to justify it to another director who might need to justify it to a congressperson who might need to justify it to their constituents.

How do we do better systems research?

At this point, we’ve established what systems research smells like and why it struggles in the modern innovation ecosystem. If you buy that systems research is both real and important, the obvious question is “so how do we change the situation?”

It’s always so much easier to point out problems than offer up concrete solutions! To a large extent, my answer is “I’m working on my best hunch at a solution by building a private ARPA.” Concretely:

- Enabling the work to coordinate systems work — it’s a full-time job that requires a lot of freedom to make good on a vision.

- Using money to align different actors around a specific system.

- Developing better coordination mechanisms — better technological roadmaps, workshops, etc.

- Figuring out how to work within and around the IP system to prevent secrecy and patent silos from killing systems.

- Being unsatisfied with only components.

- Focusing on integration-based definitions of “done.”

- Having patience and being willing to fund work that isn’t constantly producing flashy results.

- Building an institution that continually refines how to do all of these things better and maintains that tacit knowledge.

Beyond that, here are some fuzzy ideas:

- More generally, funding organizations that care about systems research could give out larger, less earmarked chunks of money to enable systems researchers to shift funds around to unexpected areas and pay people to do the grungy work.

- Stop enshrining efficiency.

- Think about other ways of evaluating a not-yet-existent technology’s potential besides “of what use is it?” Some others might include: what kind of length/time/energy scales does it let us access that we couldn’t before? How different is the process from other things? (Drastically different processes may offer more S-curve to climb).

The broadest point is that there are many different activities that fall under the umbrella of “research.” Systems research is just one. Each one plays a different role in some broader ecosystem. There is a much bigger project to be done to make explicit different kinds of research activities and understand how to nourish them.

Thanks

To Omar Rizwan and Pritha Ghosh for conversations that generated some of the ideas in this piece and to Omar Rizwan, Evan Miyazono, and Adam Marblestone for reading drafts.